Impact

- Test of Time Honorable Mention Award, ACM Multimedia

J. Müller, F. Alt, D. Michelis, and A. Schmidt. Requirements and Design Space for Interactive Public Displays. In Proceedings of the International Conference on Multimedia (MM’10), Association for Computing Machinery, New York, NY, USA, 2010, p. 1285–1294. doi:10.1145/1873951.1874203

Digital immersion is moving into public space. Interactive screens and public displays are deployed in urban environments, malls, and shop windows. Inner city areas, airports, train stations and stadiums are experiencing a transformation from traditional to digital displays enabling new forms of multimedia presentation and new user experiences. Imagine a walkway with digital displays that allows a user to immerse herself in her favorite content while moving through public space. In this paper we discuss the fundamentals for creating exciting public displays and multimedia experiences enabling new forms of engagement with digital content. Interaction in public space and with public displays can be categorized in phases, each having specific requirements. Attracting, engaging and motivating the user are central design issues that are addressed in this paper. We provide a comprehensive analysis of the design space explaining mental models and interaction modalities and we conclude a taxonomy for interactive public display from this analysis. Our analysis and the taxonomy are grounded in a large number of research projects, art installations and experience. With our contribution we aim at providing a comprehensive guide for designers and developers of interactive multimedia on public displays.

@InProceedings{mueller2010mm, author = {M\"{u}ller, J\"{o}rg and Alt, Florian and Michelis, Daniel and Schmidt, Albrecht}, booktitle = {{Proceedings of the International Conference on Multimedia}}, title = {{Requirements and Design Space for Interactive Public Displays}}, year = {2010}, address = {New York, NY, USA}, note = {mueller2010mm}, pages = {1285--1294}, publisher = {Association for Computing Machinery}, series = {MM'10}, abstract = {Digital immersion is moving into public space. Interactive screens and public displays are deployed in urban environments, malls, and shop windows. Inner city areas, airports, train stations and stadiums are experiencing a transformation from traditional to digital displays enabling new forms of multimedia presentation and new user experiences. Imagine a walkway with digital displays that allows a user to immerse herself in her favorite content while moving through public space. In this paper we discuss the fundamentals for creating exciting public displays and multimedia experiences enabling new forms of engagement with digital content. Interaction in public space and with public displays can be categorized in phases, each having specific requirements. Attracting, engaging and motivating the user are central design issues that are addressed in this paper. We provide a comprehensive analysis of the design space explaining mental models and interaction modalities and we conclude a taxonomy for interactive public display from this analysis. Our analysis and the taxonomy are grounded in a large number of research projects, art installations and experience. 
With our contribution we aim at providing a comprehensive guide for designers and developers of interactive multimedia on public displays.}, acmid = {1874203}, doi = {10.1145/1873951.1874203}, isbn = {978-1-60558-933-6}, keywords = {design space, interaction, public displays, requirements}, location = {Firenze, Italy}, numpages = {10}, timestamp = {2010.09.01}, url = {http://www.florian-alt.org/unibw/wp-content/publications/mueller2010mm.pdf}, }

Best Papers and Honorable Mentions

- Honorable Mention, SportsHCI’25

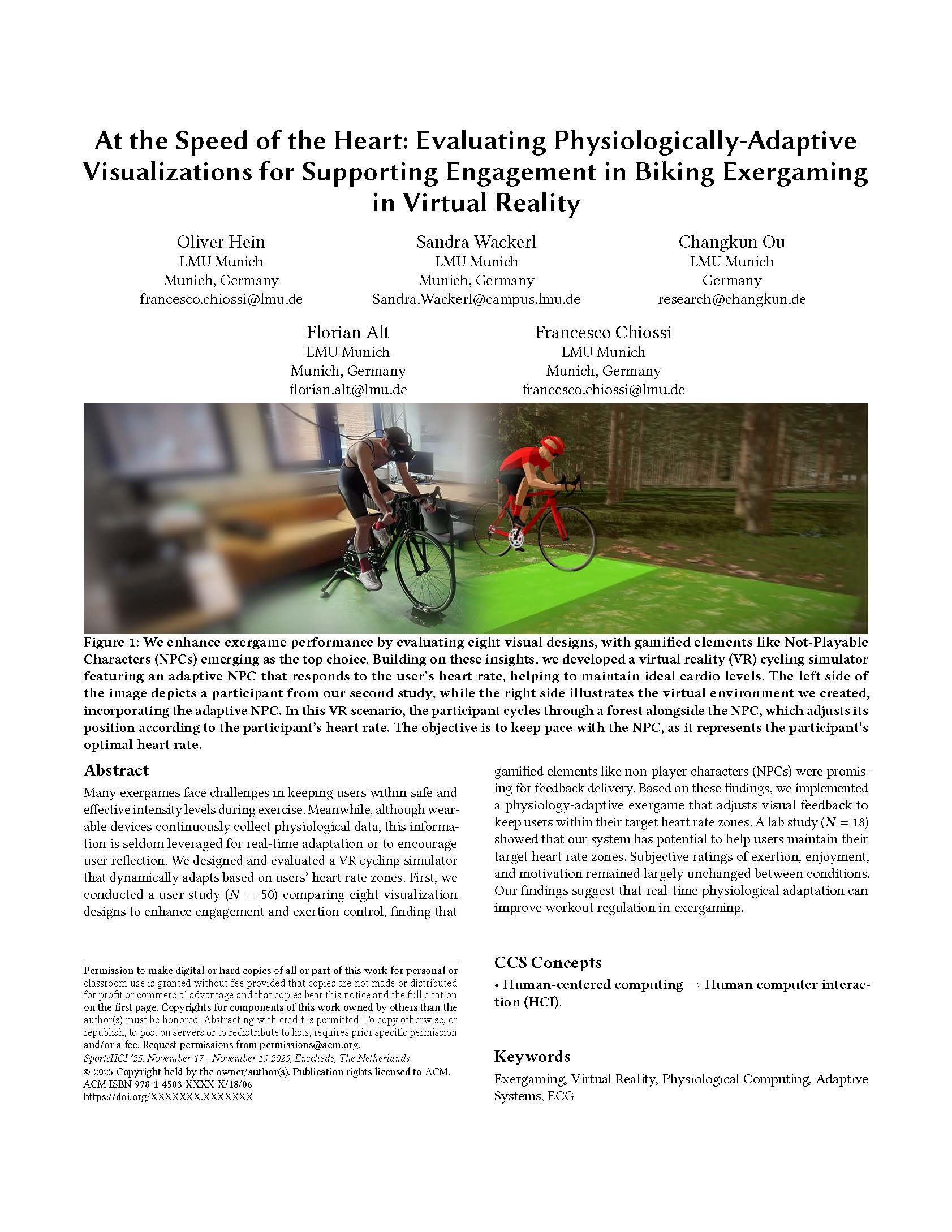

O. Hein, S. Wackerl, C. Ou, F. Alt, and F. Chiossi. At the Speed of the Heart: Evaluating Physiologically-Adaptive Visualizations for Supporting Engagement in Biking Exergaming in Virtual Reality. In Proceedings of the First Annual Conference on Human-Computer Interaction and Sports (SportsHCI ’25), Association for Computing Machinery, New York, NY, USA, 2025. doi:10.1145/3749385.3749398

Many exergames face challenges in keeping users within safe and effective intensity levels during exercise. Meanwhile, although wearable devices continuously collect physiological data, this information is seldom leveraged for real-time adaptation or to encourage user reflection. We designed and evaluated a VR cycling simulator that dynamically adapts based on users’ heart rate zones. First, we conducted a user study (N = 50) comparing eight visualization designs to enhance engagement and exertion control, finding that gamified elements like non-player characters (NPCs) were promising for feedback delivery. Based on these findings, we implemented a physiology-adaptive exergame that adjusts visual feedback to keep users within their target heart rate zones. A lab study (N = 18) showed that our system has potential to help users maintain their target heart rate zones. Subjective ratings of exertion, enjoyment, and motivation remained largely unchanged between conditions. Our findings suggest that real-time physiological adaptation through NPC visualizations can improve workout regulation in exergaming.

@InProceedings{hein2025sportshci, author = {Hein, Oliver and Wackerl, Sandra and Ou, Changkun and Alt, Florian and Chiossi, Francesco}, booktitle = {Proceedings of the First Annual Conference on Human-Computer Interaction and Sports}, title = {At the Speed of the Heart: Evaluating Physiologically-Adaptive Visualizations for Supporting Engagement in Biking Exergaming in Virtual Reality}, year = {2025}, address = {New York, NY, USA}, note = {hein2025sportshci}, publisher = {Association for Computing Machinery}, series = {SportsHCI '25}, abstract = {Many exergames face challenges in keeping users within safe and effective intensity levels during exercise. Meanwhile, although wearable devices continuously collect physiological data, this information is seldom leveraged for real-time adaptation or to encourage user reflection. We designed and evaluated a VR cycling simulator that dynamically adapts based on users’ heart rate zones. First, we conducted a user study (N = 50) comparing eight visualization designs to enhance engagement and exertion control, finding that gamified elements like non-player characters (NPCs) were promising for feedback delivery. Based on these findings, we implemented a physiology-adaptive exergame that adjusts visual feedback to keep users within their target heart rate zones. A lab study (N = 18) showed that our system has potential to help users maintain their target heart rate zones. Subjective ratings of exertion, enjoyment, and motivation remained largely unchanged between conditions. Our findings suggest that real-time physiological adaptation through NPC visualizations can improve workout regulation in exergaming.}, articleno = {15}, doi = {10.1145/3749385.3749398}, isbn = {9798400714283}, keywords = {Exergaming, Virtual Reality, Physiological Computing, Adaptive Systems, ECG}, numpages = {18}, timestamp = {2025.11.20}, url = {http://www.florian-alt.org/unibw/wp-content/publications/hein2025sportshci.pdf}, }

- Best Paper Award, 10th International XR-Metaverse Conference 2025

P. Rauschnabel, R. Felix, M. Meissner, F. Alt, and C. Hinsch. Spatial Computing: Key Concepts, Implications, and Research Agenda. In Proceedings of the International XR-Metaverse Conference 2025 (XRM ’25), Springer, 2025.

Spatial computing has recently emerged as a promising concept in business, driven by technological advancements and key industry players. In 2023, Apple introduced the term spatial computing alongside the launch of the Apple Vision Pro headset, marking a significant shift in how this technology is perceived and applied across industries. However, while concepts such as Extended Reality or xReality (XR), Augmented Reality (AR), Virtual Reality (VR), and the Metaverse are well-established in both management practice and academic literature, research on spatial computing remains sparse, particularly in a business context. The current research (work-in-progress) addresses this gap by (1) delineating spatial computing from related concepts, and (2) developing a conceptual framework that identifies spatial computing core elements, outcomes, and strategies.

@InProceedings{rauschnabel2025xrm, author = {Philipp Rauschnabel AND Reto Felix AND Martin Meissner AND Florian Alt AND Chris Hinsch}, booktitle = {Proceedings of the International XR-Metaverse Conference 2025}, title = {Spatial Computing: Key Concepts, Implications, and Research Agenda}, year = {2025}, note = {rauschnabel2025xrm}, publisher = {Springer}, series = {XRM '25}, abstract = {Spatial computing has recently emerged as a promising concept in business, driven by technological advancements and key industry players. In 2023, Apple introduced the term spatial computing alongside the launch of the Apple Vision Pro headset, marking a significant shift in how this technology is perceived and applied across industries. However, while concepts such as Extended Reality or xReality (XR), Augmented Reality (AR), Virtual Reality (VR), and the Metaverse are well-established in both management practice and academic literature, research on spatial computing remains sparse, particularly in a business context. The current research (work-in-progress) addresses this gap by (1) delineating spatial computing from related concepts, and (2) developing a conceptual framework that identifies spatial computing core elements, outcomes, and strategies}, timestamp = {2025.04.10}, }

- Best of CHI Honorable Mention Award, CHI’24

S. D. Rodriguez, P. Chatterjee, A. D. Phuong, F. Alt, and K. Marky. Do You Need to Touch? Exploring Correlations between Personal Attributes and Preferences for Tangible Privacy Mechanisms. In Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems (CHI ’24), Association for Computing Machinery, New York, NY, USA, 2024. doi:10.1145/3613904.3642863

This paper explores how personal attributes, such as age, gender, technological expertise, or “need for touch”, correlate with people’s preferences for properties of tangible privacy protection mechanisms, for example, physically covering a camera. For this, we conducted an online survey (N = 444) where we captured participants’ preferences of eight established tangible privacy mechanisms well-known in daily life, their perceptions of effective privacy protection, and personal attributes. We found that the attributes that correlated most strongly with participants’ perceptions of the established tangible privacy mechanisms were their “need for touch” and previous experiences with the mechanisms. We use our findings to identify desirable characteristics of tangible mechanisms to better inform future tangible, digital, and mixed privacy protections. We also show which individuals benefit most from tangibles, ultimately motivating a more individual and effective approach to privacy protection in the future.

@InProceedings{delgado2024chi, author = {Sarah Delgado Rodriguez AND Priyasha Chatterjee AND Anh Dao Phuong AND Florian Alt AND Karola Marky}, booktitle = {{Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems}}, title = {{Do You Need to Touch? Exploring Correlations between Personal Attributes and Preferences for Tangible Privacy Mechanisms}}, year = {2024}, address = {New York, NY, USA}, note = {delgado2024chi}, publisher = {Association for Computing Machinery}, series = {CHI ’24}, abstract = {This paper explores how personal attributes, such as age, gender, technological expertise, or “need for touch”, correlate with people’s preferences for properties of tangible privacy protection mechanisms, for example, physically covering a camera. For this, we conducted an online survey (N = 444) where we captured participants’ preferences of eight established tangible privacy mechanisms well-known in daily life, their perceptions of effective privacy protection, and personal attributes. We found that the attributes that correlated most strongly with participants’ perceptions of the established tangible privacy mechanisms were their “need for touch” and previous experiences with the mechanisms. We use our findings to identify desirable characteristics of tangible mechanisms to better inform future tangible, digital, and mixed privacy protections. We also show which individuals benefit most from tangibles, ultimately motivating a more individual and effective approach to privacy protection in the future.}, doi = {10.1145/3613904.3642863}, isbn = {979-8-4007-0330-0/24/05}, location = {Honolulu, HI, USA}, timestamp = {2024.05.16}, url = {http://florian-alt.org/unibw/wp-content/publications/delgado2024chi.pdf}, video = {delgado2024chi}, }

- Honorable Mention Award, SUI’23

K. Pfeuffer, J. Obernolte, F. Dietz, V. Mäkelä, L. Sidenmark, P. Manakhov, M. Pakanen, and F. Alt. PalmGazer: Unimanual Eye-Hand Menus in Augmented Reality. In Proceedings of the 2023 ACM Symposium on Spatial User Interaction (SUI ’23), Association for Computing Machinery, New York, NY, USA, 2023. doi:10.1145/3607822.3614523

How can we design the user interfaces for augmented reality (AR) so that we can interact as simple, flexible and expressive as we can with smartphones in one hand? To explore this question, we propose PalmGazer as an interaction concept integrating eye-hand interaction to establish a singlehandedly operable menu system. In particular, PalmGazer is designed to support quick and spontaneous digital commands – such as to play a music track, check notifications or browse visual media – through our devised three-way interaction model: hand opening to summon the menu UI, eye-hand input for selection of items, and dragging gesture for navigation. A key aspect is that it remains always-accessible and movable to the user, as the menu supports meaningful hand and head based reference frames. We demonstrate the concept in practice through a prototypical mobile UI with application probes, and describe technique designs specifically-tailored to the application UI. A qualitative evaluation highlights the system’s interaction benefits and drawbacks, e.g., that common 2D scroll and selection tasks are simple to operate, but higher degrees of freedom may be reserved for two hands. Our work contributes interaction techniques and design insights to expand AR’s uni-manual capabilities.

@InProceedings{pfeuffer2023sui, author = {Pfeuffer, Ken and Obernolte, Jan and Dietz, Felix and M\"{a}kel\"{a}, Ville and Sidenmark, Ludwig and Manakhov, Pavel and Pakanen, Minna and Alt, Florian}, booktitle = {Proceedings of the 2023 ACM Symposium on Spatial User Interaction}, title = {PalmGazer: Unimanual Eye-Hand Menus in Augmented Reality}, year = {2023}, address = {New York, NY, USA}, note = {pfeuffer2023sui}, publisher = {Association for Computing Machinery}, series = {SUI '23}, abstract = {How can we design the user interfaces for augmented reality (AR) so that we can interact as simple, flexible and expressive as we can with smartphones in one hand? To explore this question, we propose PalmGazer as an interaction concept integrating eye-hand interaction to establish a singlehandedly operable menu system. In particular, PalmGazer is designed to support quick and spontaneous digital commands– such as to play a music track, check notifications or browse visual media – through our devised three-way interaction model: hand opening to summon the menu UI, eye-hand input for selection of items, and dragging gesture for navigation. A key aspect is that it remains always-accessible and movable to the user, as the menu supports meaningful hand and head based reference frames. We demonstrate the concept in practice through a prototypical mobile UI with application probes, and describe technique designs specifically-tailored to the application UI. A qualitative evaluation highlights the system’s interaction benefits and drawbacks, e.g., that common 2D scroll and selection tasks are simple to operate, but higher degrees of freedom may be reserved for two hands. 
Our work contributes interaction techniques and design insights to expand AR’s uni-manual capabilities.}, articleno = {10}, doi = {10.1145/3607822.3614523}, isbn = {9798400702815}, keywords = {menu, augmented reality, gestures, eye-hand interaction, gaze}, location = {Sydney, NSW, Australia}, numpages = {12}, timestamp = {2023.10.08}, url = {http://www.florian-alt.org/unibw/wp-content/publications/pfeuffer2023sui.pdf}, }

- Best Paper Award, ETRA’23

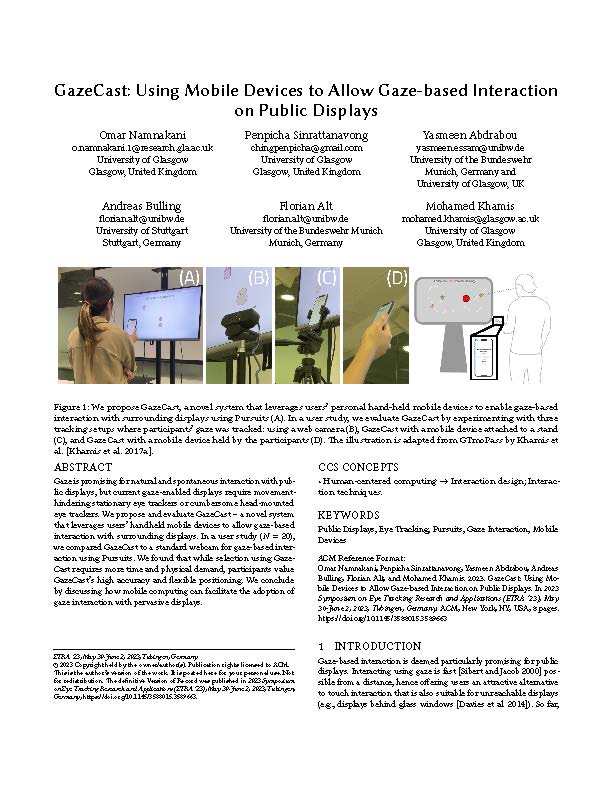

O. Namnakani, P. Sinrattanavong, Y. Abdrabou, A. Bulling, F. Alt, and M. Khamis. GazeCast: Using Mobile Devices to Allow Gaze-Based Interaction on Public Displays. In Proceedings of the 2023 ACM Symposium on Eye Tracking Research & Applications (ETRA ’23), Association for Computing Machinery, New York, NY, USA, 2023. doi:10.1145/3588015.3589663

Gaze is promising for natural and spontaneous interaction with public displays, but current gaze-enabled displays require movement-hindering stationary eye trackers or cumbersome head-mounted eye trackers. We propose and evaluate GazeCast – a novel system that leverages users’ handheld mobile devices to allow gaze-based interaction with surrounding displays. In a user study (N = 20), we compared GazeCast to a standard webcam for gaze-based interaction using Pursuits. We found that while selection using GazeCast requires more time and physical demand, participants value GazeCast’s high accuracy and flexible positioning. We conclude by discussing how mobile computing can facilitate the adoption of gaze interaction with pervasive displays.

@InProceedings{namnakani2023cogain, author = {Namnakani, Omar and Sinrattanavong, Penpicha and Abdrabou, Yasmeen and Bulling, Andreas and Alt, Florian and Khamis, Mohamed}, booktitle = {{Proceedings of the 2023 ACM Symposium on Eye Tracking Research \& Applications}}, title = {GazeCast: Using Mobile Devices to Allow Gaze-Based Interaction on Public Displays}, year = {2023}, address = {New York, NY, USA}, note = {namnakani2023cogain}, publisher = {Association for Computing Machinery}, series = {ETRA '23}, abstract = {Gaze is promising for natural and spontaneous interaction with public displays, but current gaze-enabled displays require movement-hindering stationary eye trackers or cumbersome head-mounted eye trackers. We propose and evaluate GazeCast – a novel system that leverages users’ handheld mobile devices to allow gaze-based interaction with surrounding displays. In a user study (N = 20), we compared GazeCast to a standard webcam for gaze-based interaction using Pursuits. We found that while selection using GazeCast requires more time and physical demand, participants value GazeCast’s high accuracy and flexible positioning. We conclude by discussing how mobile computing can facilitate the adoption of gaze interaction with pervasive displays.}, articleno = {92}, doi = {10.1145/3588015.3589663}, isbn = {9798400701504}, keywords = {Public Displays, Mobile Devices, Gaze Interaction, Pursuits, Eye Tracking}, location = {Tübingen, Germany}, numpages = {8}, timestamp = {2023.05.30}, url = {http://www.florian-alt.org/unibw/wp-content/publications/namnakani2023cogain.pdf}, }

- Best Paper Award, ICL’22

M. Froehlich, J. Vega, A. Pahl, S. Lotz, F. Alt, A. Schmidt, and I. Welpe. Prototyping with Blockchain: A Case Study for Teaching Blockchain Application Development at University. In Learning in the Age of Digital and Green Transition (ICL ’22), Springer International Publishing, Cham, 2023, p. 1005–1017. doi:10.1007/978-3-031-26876-2_94

Blockchain technology is believed to have a potential for innovation comparable to the early internet. However, it is difficult to understand, learn, and use. A particular challenge for teaching software engineering of blockchain applications is identifying suitable use cases: When does a decentralized application running on smart contracts offer advantages over a classic distributed software architecture? This question extends the realms of software engineering and connects to fundamental economic aspects of ownership and incentive systems. The lack of usability of today’s blockchain applications indicates that often applications without a clear advantage are developed. At the same time, there exists little information for educators on how to teach applied blockchain application development. We argue that an interdisciplinary teaching approach can address these issues and equip the next generation of blockchain developers with the skills and entrepreneurial mindset to build valuable and usable products. To this end, we developed, conducted, and evaluated an interdisciplinary capstone-like course grounded in the design sprint method with N = 11 graduate students. Our pre-/post evaluation indicates high efficacy: Participants improved across all measured learning dimensions, particularly use-case identification and blockchain prototyping in teams. We contribute the syllabus, a detailed evaluation, and lessons learned for educators.

@InProceedings{froehlich2022icl, author = {Froehlich, Michael and Vega, Jose and Pahl, Amelie and Lotz, Sergej and Alt, Florian and Schmidt, Albrecht and Welpe, Isabell}, booktitle = {Learning in the Age of Digital and Green Transition}, title = {Prototyping with Blockchain: A Case Study for Teaching Blockchain Application Development at University}, year = {2023}, note = {froehlich2022icl}, address = {Cham}, editor = {Auer, Michael E. and Pachatz, Wolfgang and R{\"u}{\"u}tmann, Tiia}, pages = {1005--1017}, publisher = {Springer International Publishing}, series = {ICL '22}, abstract = {Blockchain technology is believed to have a potential for innovation comparable to the early internet. However, it is difficult to understand, learn, and use. A particular challenge for teaching software engineering of blockchain applications is identifying suitable use cases: When does a decentralized application running on smart contracts offer advantages over a classic distributed software architecture? This question extends the realms of software engineering and connects to fundamental economic aspects of ownership and incentive systems. The lack of usability of today's blockchain applications indicates that often applications without a clear advantage are developed. At the same time, there exists little information for educators on how to teach applied blockchain application development. We argue that an interdisciplinary teaching approach can address these issues and equip the next generation of blockchain developers with the skills and entrepreneurial mindset to build valuable and usable products. To this end, we developed, conducted, and evaluated an interdisciplinary capstone-like course grounded in the design sprint method with N = 11 graduate students. Our pre-/post evaluation indicates high efficacy: Participants improved across all measured learning dimensions, particularly use-case identification and blockchain prototyping in teams. 
We contribute the syllabus, a detailed evaluation, and lessons learned for educators.}, doi = {10.1007/978-3-031-26876-2_94}, isbn = {978-3-031-26876-2}, timestamp = {2022-12-01}, url = {http://www.florian-alt.org/unibw/wp-content/publications/froehlich2022icl.pdf}, }

- Honorable Mention Award, MUM’21

K. Marky, S. Prange, M. Mühlhäuser, and F. Alt. Roles Matter! Understanding Differences in the Privacy Mental Models of Smart Home Visitors and Resident. In Proceedings of the 19th International Conference on Mobile and Ubiquitous Multimedia (MUM’21), Association for Computing Machinery, New York, NY, USA, 2021. doi:10.1145/3490632.3490664

In this paper, we contribute an in-depth study of the mental models of various roles in smart home ecosystems. In particular, we compared mental models regarding data collection among residents (primary users) and visitors of a smart home in a qualitative study (N=30) to better understand how bystanders’ specific privacy needs can be addressed. Our results suggest that bystanders have a limited understanding of how smart devices collect and store sensitive data about them. Misconceptions in bystanders’ mental models result in missing awareness and ultimately limit their ability to protect their privacy. We discuss the limitations of existing solutions and challenges for the design of future smart home environments that reflect the privacy concerns of users and bystanders alike, meant to inform the design of future privacy interfaces for IoT devices.

@InProceedings{marky2021mum, author = {Marky, Karola AND Prange, Sarah AND Mühlhäuser, Max AND Alt, Florian}, booktitle = {{Proceedings of the 19th International Conference on Mobile and Ubiquitous Multimedia}}, title = {{Roles Matter! Understanding Differences in the Privacy Mental Models of Smart Home Visitors and Resident}}, year = {2021}, address = {New York, NY, USA}, note = {marky2021mum}, publisher = {Association for Computing Machinery}, series = {MUM'21}, abstract = {In this paper, we contribute an in-depth study of the mental models of various roles in smart home ecosystems. In particular, we compared mental models regarding data collection among residents (primary users) and visitors of a smart home in a qualitative study (N=30) to better understand how bystanders’ specific privacy needs can be addressed. Our results suggest that bystanders have a limited understanding of how smart devices collect and store sensitive data about them. Misconceptions in bystanders' mental models result in missing awareness and ultimately limit their ability to protect their privacy. We discuss the limitations of existing solutions and challenges for the design of future smart home environments that reflect the privacy concerns of users and bystanders alike, meant to inform the design of future privacy interfaces for IoT devices.}, doi = {10.1145/3490632.3490664}, location = {Leuven, Belgium}, timestamp = {2021.11.25}, url = {http://www.florian-alt.org/unibw/wp-content/publications/marky2021mum.pdf}, }

- Honorable Mention Award, DIS’21

M. Froehlich, C. Kobiella, A. Schmidt, and F. Alt. Is It Better With Onboarding? Improving First-Time Cryptocurrency App Experiences. In Designing Interactive Systems Conference 2021 (DIS ’21), Association for Computing Machinery, New York, NY, USA, 2021, p. 78–89. doi:10.1145/3461778.3462047

Engaging first-time users of mobile apps is challenging. Onboarding task flows are designed to minimize the drop out of users. To this point, there is little scientific insight into how to design these task flows. We explore this question with a specific focus on financial applications, which pose a particularly high hurdle and require significant trust. We address this question by combining two approaches. We first conducted semi-structured interviews (n=16) exploring users’ meaning-making when engaging with new mobile applications in general. We then prototyped and evaluated onboarding task flows (n=16) for two mobile cryptocurrency apps using the minimalist instruction framework. Our results suggest that well-designed onboarding processes can improve the perceived usability of first-time users for feature-rich mobile apps. We discuss how the expectations users voiced during the interview study can be met by applying instructional design principles and reason that the minimalist instruction framework for mobile onboarding insights presents itself as a useful design method for practitioners to develop onboarding processes and also to identify when not to.

@InProceedings{froehlich2021dis2, author = {Froehlich, Michael and Kobiella, Charlotte and Schmidt, Albrecht and Alt, Florian}, booktitle = {{Designing Interactive Systems Conference 2021}}, title = {{Is It Better With Onboarding? Improving First-Time Cryptocurrency App Experiences}}, year = {2021}, address = {New York, NY, USA}, note = {froehlich2021dis2}, pages = {78–89}, publisher = {Association for Computing Machinery}, series = {DIS '21}, abstract = {Engaging first-time users of mobile apps is challenging. Onboarding task flows are designed to minimize the drop out of users. To this point, there is little scientific insight into how to design these task flows. We explore this question with a specific focus on financial applications, which pose a particularly high hurdle and require significant trust. We address this question by combining two approaches. We first conducted semi-structured interviews (n=16) exploring users’ meaning-making when engaging with new mobile applications in general. We then prototyped and evaluated onboarding task flows (n=16) for two mobile cryptocurrency apps using the minimalist instruction framework. Our results suggest that well-designed onboarding processes can improve the perceived usability of first-time users for feature-rich mobile apps. We discuss how the expectations users voiced during the interview study can be met by applying instructional design principles and reason that the minimalist instruction framework for mobile onboarding insights presents itself as a useful design method for practitioners to develop onboarding processes and also to identify when not to.}, doi = {10.1145/3461778.3462047}, isbn = {9781450384766}, numpages = {12}, owner = {florian}, timestamp = {2021.06.25}, url = {http://www.florian-alt.org/unibw/wp-content/publications/froehlich2021dis2.pdf}, video = {froehlich2021dis2}, }

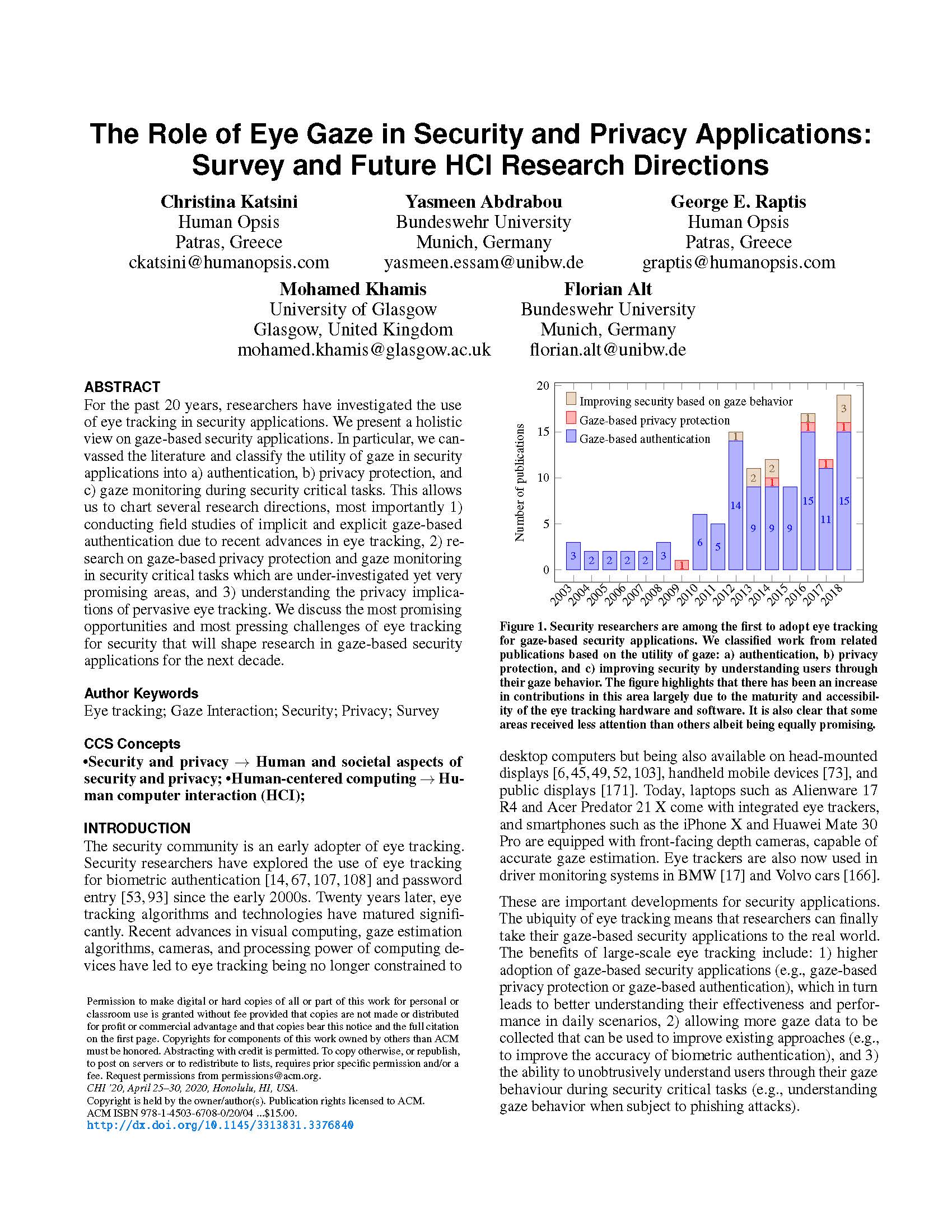

- Best of CHI Honorable Mention Award, CHI’20

C. Katsini, Y. Abdrabou, G. E. Raptidis, M. Khamis, and F. Alt. The Role of Eye Gaze in Security and Privacy Applications: Survey and Future HCI Research Directions. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (CHI ’20), Association for Computing Machinery, New York, NY, USA, 2020. doi:10.1145/3313831.3376840

For the past 20 years, researchers have investigated the use of eye tracking in security applications. We present a holistic view on gaze-based security applications. In particular, we canvassed the literature and classify the utility of gaze in security applications into a) authentication, b) privacy protection, and c) gaze monitoring during security critical tasks. This allows us to chart several research directions, most importantly 1) conducting field studies of implicit and explicit gaze-based authentication due to recent advances in eye tracking, 2) research on gaze-based privacy protection and gaze monitoring in security critical tasks which are under-investigated yet very promising areas, and 3) understanding the privacy implications of pervasive eye tracking. We discuss the most promising opportunities and most pressing challenges of eye tracking for security that will shape research in gaze-based security applications for the next decade.

@InProceedings{katsini2020chi, author = {Christina Katsini AND Yasmeen Abdrabou AND George E. Raptidis AND Mohamed Khamis AND Florian Alt}, booktitle = {{Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems}}, title = {{The Role of Eye Gaze in Security and Privacy Applications: Survey and Future HCI Research Directions}}, year = {2020}, address = {New York, NY, USA}, note = {katsini2020chi}, publisher = {Association for Computing Machinery}, series = {CHI ’20}, abstract = {For the past 20 years, researchers have investigated the use of eye tracking in security applications. We present a holistic view on gaze-based security applications. In particular, we canvassed the literature and classify the utility of gaze in security applications into a) authentication, b) privacy protection, and c) gaze monitoring during security critical tasks. This allows us to chart several research directions, most importantly 1) conducting field studies of implicit and explicit gaze-based authentication due to recent advances in eye tracking, 2) research on gaze-based privacy protection and gaze monitoring in security critical tasks which are under-investigated yet very promising areas, and 3) understanding the privacy implications of pervasive eye tracking. We discuss the most promising opportunities and most pressing challenges of eye tracking for security that will shape research in gaze-based security applications for the next decade.}, doi = {10.1145/3313831.3376840}, isbn = {9781450367080}, keywords = {Eye tracking, Gaze Interaction, Security, Privacy, Survey}, location = {Honolulu, HI, US}, talk = {https://www.youtube.com/watch?v=U5r2qIGw42k}, timestamp = {2020.05.03}, url = {http://florian-alt.org/unibw/wp-content/publications/katsini2020chi.pdf}, video = {katsini2020chi}, }

- Honorable Mention Award, MobileHCI’18

M. Khamis, F. Alt, and A. Bulling. The Past, Present, and Future of Gaze-enabled Handheld Mobile Devices: Survey and Lessons Learned. In Proceedings of the 20th International Conference on Human-Computer Interaction with Mobile Devices and Services (MobileHCI ’18), Association for Computing Machinery, New York, NY, USA, 2018, p. 38:1–38:17. doi:10.1145/3229434.3229452

[BibTeX] [Abstract] [Download PDF]While first-generation mobile gaze interfaces required special-purpose hardware, recent advances in computational gaze estimation and the availability of sensor-rich and powerful devices are finally fulfilling the promise of pervasive eye tracking and eye-based interaction on off-the-shelf mobile devices. This work provides the first holistic view on the past, present, and future of eye tracking on handheld mobile devices. To this end, we discuss how research developed from building hardware prototypes to accurate gaze estimation on unmodified smartphones and tablets. We then discuss implications by laying out 1) novel opportunities, including pervasive advertising and conducting in-the-wild eye tracking studies on handhelds, and 2) new challenges that require further research, such as visibility of the user’s eyes, lighting conditions, and privacy implications. We discuss how these developments shape MobileHCI research in the future, possibly the next 20 years.

@InProceedings{khamis2018mobilehci, author = {Khamis, Mohamed and Alt, Florian and Bulling, Andreas}, booktitle = {{Proceedings of the 20th International Conference on Human-Computer Interaction with Mobile Devices and Services}}, title = {{The Past, Present, and Future of Gaze-enabled Handheld Mobile Devices: Survey and Lessons Learned}}, year = {2018}, address = {New York, NY, USA}, note = {khamis2018mobilehci}, pages = {38:1--38:17}, publisher = {Association for Computing Machinery}, series = {MobileHCI '18}, abstract = {While first-generation mobile gaze interfaces required special-purpose hardware, recent advances in computational gaze estimation and the availability of sensor-rich and powerful devices is finally fulfilling the promise of pervasive eye tracking and eye-based interaction on off-the-shelf mobile devices. This work provides the first holistic view on the past, present, and future of eye tracking on handheld mobile devices. To this end, we discuss how research developed from building hardware prototypes, to accurate gaze estimation on unmodified smartphones and tablets. We then discuss implications by laying out 1) novel opportunities, including pervasive advertising and conducting in-the-wild eye tracking studies on handhelds, and 2) new challenges that require further research, such as visibility of the user's eyes, lighting conditions, and privacy implications. We discuss how these developments shape MobileHCI research in the future, possibly the next 20 years.}, acmid = {3229452}, articleno = {38}, doi = {10.1145/3229434.3229452}, isbn = {978-1-4503-5898-9}, keywords = {eye tracking, gaze estimation, gaze interaction, mobile devices, smartphones, tablets}, location = {Barcelona, Spain}, numpages = {17}, timestamp = {2018.09.01}, url = {http://www.florian-alt.org/unibw/wp-content/publications/khamis2018mobilehci.pdf}, }

- Best of CHI Honorable Mention Award, CHI’18

M. Khamis, C. Becker, A. Bulling, and F. Alt. Which One is Me?: Identifying Oneself on Public Displays. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI ’18), Association for Computing Machinery, New York, NY, USA, 2018, p. 287:1–287:12. doi:10.1145/3173574.3173861

[BibTeX] [Abstract] [Download PDF]While user representations are extensively used on public displays, it remains unclear how well users can recognize their own representation among those of surrounding users. We study the most widely used representations: abstract objects, skeletons, silhouettes and mirrors. In a prestudy (N=12), we identify five strategies that users follow to recognize themselves on public displays. In a second study (N=19), we quantify the users’ recognition time and accuracy with respect to each representation type. Our findings suggest that there is a significant effect of (1) the representation type, (2) the strategies performed by users, and (3) the combination of both on recognition time and accuracy. We discuss the suitability of each representation for different settings and provide specific recommendations as to how user representations should be applied in multi-user scenarios. These recommendations guide practitioners and researchers in selecting the representation that optimizes the most for the deployment’s requirements, and for the user strategies that are feasible in that environment.

@InProceedings{khamis2018chi1, author = {Khamis, Mohamed and Becker, Christian and Bulling, Andreas and Alt, Florian}, booktitle = {{Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems}}, title = {{Which One is Me?: Identifying Oneself on Public Displays}}, year = {2018}, address = {New York, NY, USA}, note = {khamis2018chi1}, pages = {287:1--287:12}, publisher = {Association for Computing Machinery}, series = {CHI '18}, abstract = {While user representations are extensively used on public displays, it remains unclear how well users can recognize their own representation among those of surrounding users. We study the most widely used representations: abstract objects, skeletons, silhouettes and mirrors. In a prestudy (N=12), we identify five strategies that users follow to recognize themselves on public displays. In a second study (N=19), we quantify the users' recognition time and accuracy with respect to each representation type. Our findings suggest that there is a significant effect of (1) the representation type, (2) the strategies performed by users, and (3) the combination of both on recognition time and accuracy. We discuss the suitability of each representation for different settings and provide specific recommendations as to how user representations should be applied in multi-user scenarios. These recommendations guide practitioners and researchers in selecting the representation that optimizes the most for the deployment's requirements, and for the user strategies that are feasible in that environment.}, acmid = {3173861}, articleno = {287}, doi = {10.1145/3173574.3173861}, isbn = {978-1-4503-5620-6}, keywords = {multiple users, public displays, user representations}, location = {Montreal QC, Canada}, numpages = {12}, timestamp = {2018.05.01}, url = {http://www.florian-alt.org/unibw/wp-content/publications/khamis2018chi1.pdf}, }

- Best Poster Award, AutoUI’18

M. Braun, F. Roider, F. Alt, and T. Gross. Automotive Research in the Public Space: Towards Deployment-Based Prototypes For Real Users. In Proceedings of the 10th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (AutomotiveUI ’18), Association for Computing Machinery, New York, NY, USA, 2018, p. 181–185. doi:10.1145/3239092.3265964

[BibTeX] [Abstract] [Download PDF]Many automotive user studies allow users to experience and evaluate interactive concepts. They are however often limited to small and specific groups of participants, such as students or experts. This might limit the generalizability of results for future users. A possible solution is to allow a large group of unbiased users to actively experience an interactive prototype and generate new ideas, but there is little experience about the realization and benefits of such an approach. We placed an interactive prototype in a public space and gathered objective and subjective data from 693 participants over the course of three months. We found a high variance in data quality and identified resulting restrictions for suitable research questions. This results in concrete requirements for hardware, software, and analytics, e.g. the need for assessing data quality. We give examples of how this approach lets users explore a system and give first-contact feedback, which differs considerably from common in-depth expert analyses.

@InProceedings{braun2018autouiadj2, author = {Braun, Michael and Roider, Florian and Alt, Florian and Gross, Tom}, booktitle = {{Proceedings of the 10th International Conference on Automotive User Interfaces and Interactive Vehicular Applications}}, title = {{Automotive Research in the Public Space: Towards Deployment-Based Prototypes For Real Users}}, year = {2018}, address = {New York, NY, USA}, note = {braun2018autouiadj2}, pages = {181--185}, publisher = {Association for Computing Machinery}, series = {AutomotiveUI '18}, abstract = {Many automotive user studies allow users to experience and evaluate interactive concepts. They are however often limited to small and specific groups of participants, such as students or experts. This might limit the generalizability of results for future users. A possible solution is to allow a large group of unbiased users to actively experience an interactive prototype and generate new ideas, but there is little experience about the realization and benefits of such an approach. We placed an interactive prototype in a public space and gathered objective and subjective data from 693 participants over the course of three months. We found a high variance in data quality and identified resulting restrictions for suitable research questions. This results in concrete requirements to hardware, software, and analytics, e.g. the need for assessing data quality, and give examples how this approach lets users explore a system and give first-contact feedback which differentiates highly from common in-depth expert analyses.}, acmid = {3265964}, doi = {10.1145/3239092.3265964}, isbn = {978-1-4503-5947-4}, keywords = {Automotive UI, Deployment, Prototypes, User Studies}, location = {Toronto, ON, Canada}, numpages = {5}, timestamp = {2018.10.05}, url = {http://www.florian-alt.org/unibw/wp-content/publications/braun2018autouiadj2.pdf}, }

- Honorable Mention Award, MUM’17

M. Khamis, L. Bandelow, S. Schick, D. Casadevall, A. Bulling, and F. Alt. They Are All After You: Investigating the Viability of a Threat Model That Involves Multiple Shoulder Surfers. In Proceedings of the 16th International Conference on Mobile and Ubiquitous Multimedia (MUM ’17), Association for Computing Machinery, New York, NY, USA, 2017, p. 31–35. doi:10.1145/3152832.3152851

[BibTeX] [Abstract] [Download PDF]Many of the authentication schemes for mobile devices that were proposed lately complicate shoulder surfing by splitting the attacker’s attention into two or more entities. For example, multimodal authentication schemes such as GazeTouchPIN and GazeTouchPass require attackers to observe the user’s gaze input and the touch input performed on the phone’s screen. These schemes have always been evaluated against single observers, while multiple observers could potentially attack these schemes with greater ease, since each of them can focus exclusively on one part of the password. In this work, we study the effectiveness of a novel threat model against authentication schemes that split the attacker’s attention. As a case study, we report on a security evaluation of two state of the art authentication schemes in the case of a team of two observers. Our results show that although multiple observers perform better against these schemes than single observers, multimodal schemes are significantly more secure against multiple observers compared to schemes that employ a single modality. We discuss how this threat model impacts the design of authentication schemes.

@InProceedings{khamis2017mum, author = {Khamis, Mohamed and Bandelow, Linda and Schick, Stina and Casadevall, Dario and Bulling, Andreas and Alt, Florian}, booktitle = {{Proceedings of the 16th International Conference on Mobile and Ubiquitous Multimedia}}, title = {{They Are All After You: Investigating the Viability of a Threat Model That Involves Multiple Shoulder Surfers}}, year = {2017}, address = {New York, NY, USA}, note = {khamis2017mum}, pages = {31--35}, publisher = {Association for Computing Machinery}, series = {MUM '17}, abstract = {Many of the authentication schemes for mobile devices that were proposed lately complicate shoulder surfing by splitting the attacker's attention into two or more entities. For example, multimodal authentication schemes such as GazeTouchPIN and GazeTouchPass require attackers to observe the user's gaze input and the touch input performed on the phone's screen. These schemes have always been evaluated against single observers, while multiple observers could potentially attack these schemes with greater ease, since each of them can focus exclusively on one part of the password. In this work, we study the effectiveness of a novel threat model against authentication schemes that split the attacker's attention. As a case study, we report on a security evaluation of two state of the art authentication schemes in the case of a team of two observers. Our results show that although multiple observers perform better against these schemes than single observers, multimodal schemes are significantly more secure against multiple observers compared to schemes that employ a single modality. 
We discuss how this threat model impacts the design of authentication schemes.}, acmid = {3152851}, doi = {10.1145/3152832.3152851}, isbn = {978-1-4503-5378-6}, keywords = {gaze gestures, multimodal authentication, multiple observers, privacy, shoulder surfing, threat model}, location = {Stuttgart, Germany}, numpages = {5}, timestamp = {2017.11.26}, url = {http://www.florian-alt.org/unibw/wp-content/publications/khamis2017mum.pdf}, }

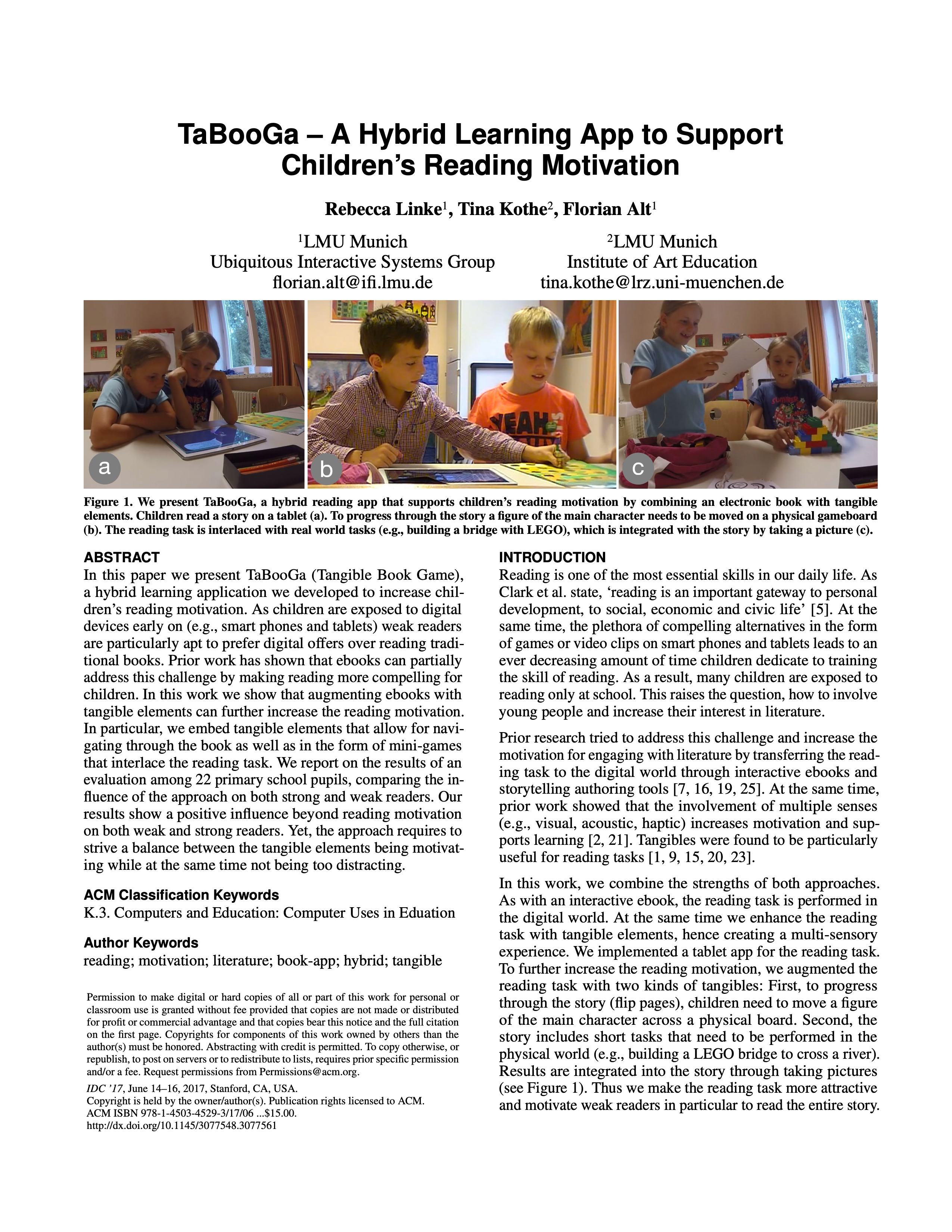

- Best Paper Award, IDC’17

R. Linke, T. Kothe, and F. Alt. TaBooGa: A Hybrid Learning App to Support Children’s Reading Motivation. In Proceedings of the 2017 Conference on Interaction Design and Children (IDC ’17), Association for Computing Machinery, New York, NY, USA, 2017, p. 278–285. doi:10.1145/3078072.3079712

[BibTeX] [Abstract] [Download PDF]In this paper we present TaBooGa (Tangible Book Game), a hybrid learning application we developed to increase children’s reading motivation. As children are exposed to digital devices early on (e.g., smart phones and tablets), weak readers are particularly apt to prefer digital offers over reading traditional books. Prior work has shown that ebooks can partially address this challenge by making reading more compelling for children. In this work we show that augmenting ebooks with tangible elements can further increase the reading motivation. In particular, we embed tangible elements that allow for navigating through the book as well as in the form of mini-games that interlace the reading task. We report on the results of an evaluation among 22 primary school pupils, comparing the influence of the approach on both strong and weak readers. Our results show a positive influence beyond reading motivation on both weak and strong readers. Yet, the approach requires striking a balance between the tangible elements being motivating and not being too distracting.

@InProceedings{linke2017idc, author = {Linke, Rebecca and Kothe, Tina and Alt, Florian}, booktitle = {{Proceedings of the 2017 Conference on Interaction Design and Children}}, title = {{TaBooGa: A Hybrid Learning App to Support Children's Reading Motivation}}, year = {2017}, address = {New York, NY, USA}, note = {linke2017idc}, pages = {278--285}, publisher = {Association for Computing Machinery}, series = {IDC '17}, abstract = {In this paper we present TaBooGa (Tangible Book Game), a hybrid learning application we developed to increase children's reading motivation. As children are exposed to digital devices early on (e.g., smart phones and tablets) weak readers are particularly apt to prefer digital offers over reading traditional books. Prior work has shown that ebooks can partially address this challenge by making reading more compelling for children. In this work we show that augmenting ebooks with tangible elements can further increase the reading motivation. In particular, we embed tangible elements that allow for navigating through the book as well as in the form of mini-games that interlace the reading task. We report on the results of an evaluation among 22 primary school pupils, comparing the influence of the approach on both strong and weak readers. Our results show a positive influence beyond reading motivation on both weak and strong readers. Yet, the approach requires to strive a balance between the tangible elements being motivating while at the same time not being too distracting.}, acmid = {3079712}, doi = {10.1145/3078072.3079712}, isbn = {978-1-4503-4921-5}, keywords = {book-app, hybrid, literature, motivation, reading, tangible}, location = {Stanford, California, USA}, numpages = {8}, timestamp = {2017.05.02}, url = {http://www.florian-alt.org/unibw/wp-content/publications/linke2017idc.pdf}, }

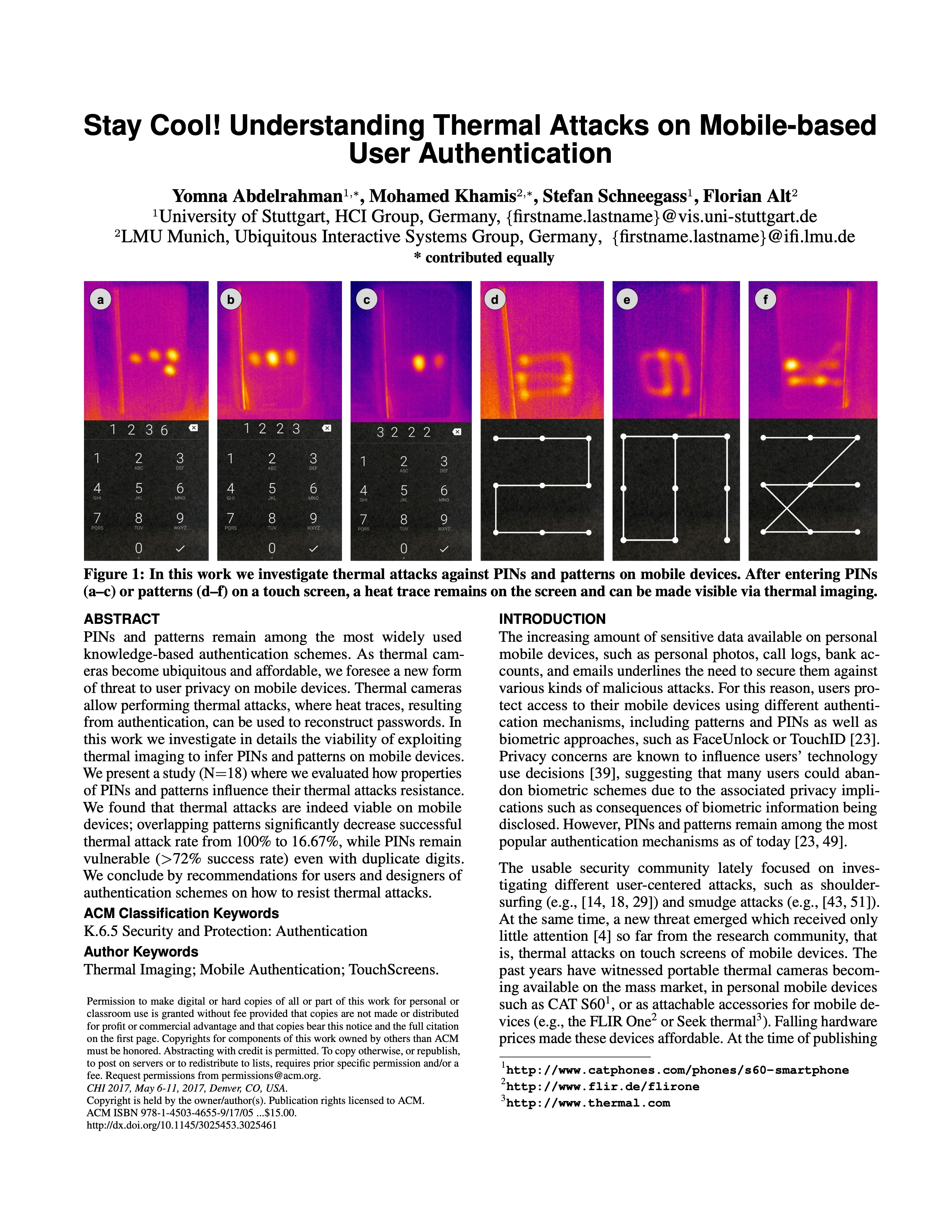

- Best of CHI Honorable Mention Award, CHI’17

Y. Abdelrahman, M. Khamis, S. Schneegass, and F. Alt. Stay Cool! Understanding Thermal Attacks on Mobile-based User Authentication. In Proceedings of the 35th Annual ACM Conference on Human Factors in Computing Systems (CHI ’17), Association for Computing Machinery, New York, NY, USA, 2017. doi:10.1145/3025453.3025461

[BibTeX] [Abstract] [Download PDF]PINs and patterns remain among the most widely used knowledge-based authentication schemes. As thermal cameras become ubiquitous and affordable, we foresee a new form of threat to user privacy on mobile devices. Thermal cameras allow performing thermal attacks, where heat traces resulting from authentication can be used to reconstruct passwords. In this work we investigate in detail the viability of exploiting thermal imaging to infer PINs and patterns on mobile devices. We present a study (N=18) where we evaluated how properties of PINs and patterns influence their resistance to thermal attacks. We found that thermal attacks are indeed viable on mobile devices; overlapping patterns significantly decrease the thermal attack success rate from 100% to 16.67%, while PINs remain vulnerable (>72% success rate) even with duplicate digits. We conclude with recommendations for users and designers of authentication schemes on how to resist thermal attacks.

@InProceedings{abdelrahman2017chi, author = {Abdelrahman, Yomna and Khamis, Mohamed and Schneegass, Stefan and Alt, Florian}, booktitle = {{Proceedings of the 35th Annual ACM Conference on Human Factors in Computing Systems}}, title = {{Stay Cool! Understanding Thermal Attacks on Mobile-based User Authentication}}, year = {2017}, address = {New York, NY, USA}, note = {abdelrahman2017chi}, publisher = {Association for Computing Machinery}, series = {CHI '17}, abstract = {PINs and patterns remain among the most widely used knowledge-based authentication schemes. As thermal cameras become ubiquitous and affordable, we foresee a new form of threat to user privacy on mobile devices. Thermal cameras allow performing thermal attacks, where heat traces, resulting from authentication, can be used to reconstruct passwords. In this work we investigate in details the viability of exploiting thermal imaging to infer PINs and patterns on mobile devices. We present a study (N=18) where we evaluated how properties of PINs and patterns influence their thermal attacks resistance. We found that thermal attacks are indeed viable on mobile devices; overlapping patterns significantly decrease successful thermal attack rate from 100% to 16.67%, while PINs remain vulnerable (>72% success rate) even with duplicate digits. We conclude by recommendations for users and designers of authentication schemes on how to resist thermal attacks.}, doi = {10.1145/3025453.3025461}, location = {Denver, CO, USA}, timestamp = {2017.05.12}, url = {http://www.florian-alt.org/unibw/wp-content/publications/abdelrahman2017chi.pdf}, video = {abdelrahman2017chi}, }

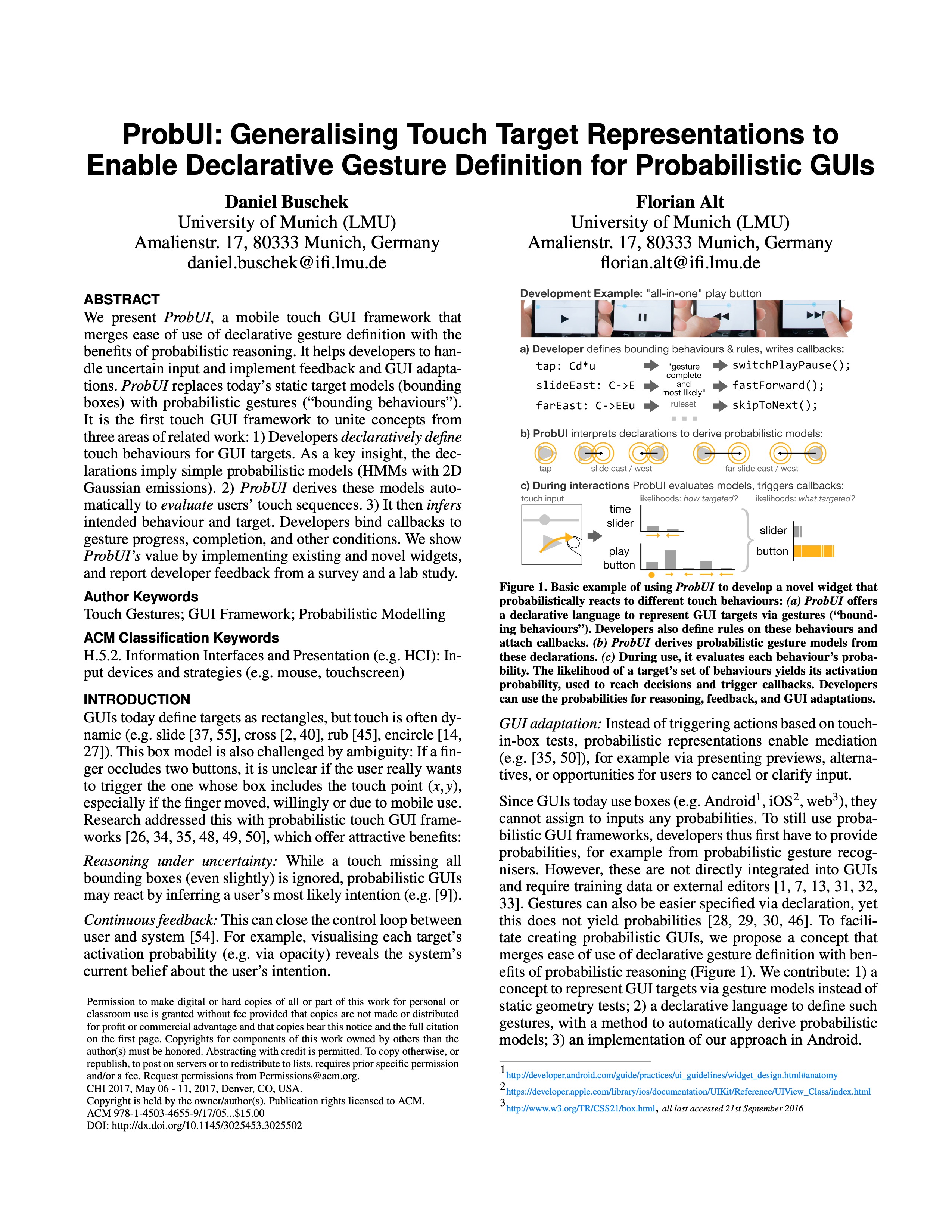

- Best of CHI Honorable Mention Award, CHI’17

D. Buschek and F. Alt. ProbUI: Generalising Touch Target Representations to Enable Declarative Gesture Definition for Probabilistic GUIs. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (CHI ’17), Association for Computing Machinery, New York, NY, USA, 2017, p. 4640–4653. doi:10.1145/3025453.3025502

[BibTeX] [Abstract] [Download PDF]We present ProbUI, a mobile touch GUI framework that merges ease of use of declarative gesture definition with the benefits of probabilistic reasoning. It helps developers to handle uncertain input and implement feedback and GUI adaptations. ProbUI replaces today’s static target models (bounding boxes) with probabilistic gestures (“bounding behaviours”). It is the first touch GUI framework to unite concepts from three areas of related work: 1) Developers declaratively define touch behaviours for GUI targets. As a key insight, the declarations imply simple probabilistic models (HMMs with 2D Gaussian emissions). 2) ProbUI derives these models automatically to evaluate users’ touch sequences. 3) It then infers intended behaviour and target. Developers bind callbacks to gesture progress, completion, and other conditions. We show ProbUI’s value by implementing existing and novel widgets, and report developer feedback from a survey and a lab study.

@InProceedings{buschek2017chi, author = {Buschek, Daniel and Alt, Florian}, booktitle = {{Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems}}, title = {{ProbUI: Generalising Touch Target Representations to Enable Declarative Gesture Definition for Probabilistic GUIs}}, year = {2017}, address = {New York, NY, USA}, note = {buschek2017chi}, pages = {4640--4653}, publisher = {Association for Computing Machinery}, series = {CHI '17}, abstract = {We present ProbUI, a mobile touch GUI framework that merges ease of use of declarative gesture definition with the benefits of probabilistic reasoning. It helps developers to handle uncertain input and implement feedback and GUI adaptations. ProbUI replaces today's static target models (bounding boxes) with probabilistic gestures ("bounding behaviours"). It is the first touch GUI framework to unite concepts from three areas of related work: 1) Developers declaratively define touch behaviours for GUI targets. As a key insight, the declarations imply simple probabilistic models (HMMs with 2D Gaussian emissions). 2) ProbUI derives these models automatically to evaluate users' touch sequences. 3) It then infers intended behaviour and target. Developers bind callbacks to gesture progress, completion, and other conditions. We show ProbUI's value by implementing existing and novel widgets, and report developer feedback from a survey and a lab study.}, acmid = {3025502}, doi = {10.1145/3025453.3025502}, isbn = {978-1-4503-4655-9}, keywords = {gui framework, probabilistic modelling, touch gestures}, location = {Denver, Colorado, USA}, numpages = {14}, timestamp = {2017.05.10}, url = {http://www.florian-alt.org/unibw/wp-content/publications/buschek2017chi.pdf}, }

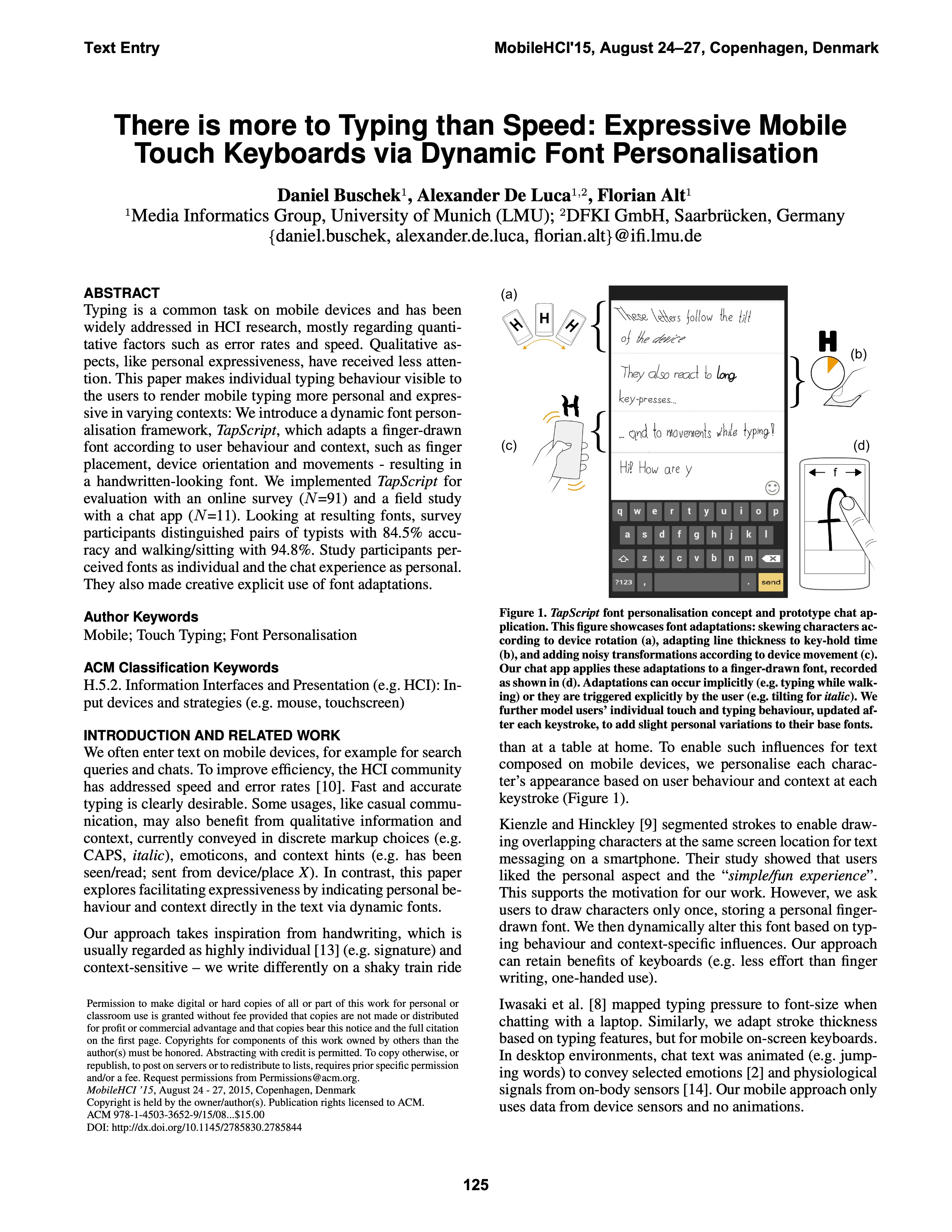

- Honorable Mention Award, MobileHCI’15

D. Buschek, A. De Luca, and F. Alt. There is More to Typing Than Speed: Expressive Mobile Touch Keyboards via Dynamic Font Personalisation. In Proceedings of the 17th International Conference on Human-Computer Interaction with Mobile Devices and Services (MobileHCI ’15), Association for Computing Machinery, New York, NY, USA, 2015, p. 125–130. doi:10.1145/2785830.2785844

[BibTeX] [Abstract] [Download PDF]Typing is a common task on mobile devices and has been widely addressed in HCI research, mostly regarding quantitative factors such as error rates and speed. Qualitative aspects, like personal expressiveness, have received less attention. This paper makes individual typing behaviour visible to the users to render mobile typing more personal and expressive in varying contexts: We introduce a dynamic font personalisation framework, TapScript, which adapts a finger-drawn font according to user behaviour and context, such as finger placement, device orientation and movements – resulting in a handwritten-looking font. We implemented TapScript for evaluation with an online survey (N=91) and a field study with a chat app (N=11). Looking at resulting fonts, survey participants distinguished pairs of typists with 84.5% accuracy and walking/sitting with 94.8%. Study participants perceived fonts as individual and the chat experience as personal. They also made creative explicit use of font adaptations.

@InProceedings{buschek2015mobilehci, author = {Buschek, Daniel and De Luca, Alexander and Alt, Florian}, booktitle = {{Proceedings of the 17th International Conference on Human-Computer Interaction with Mobile Devices and Services}}, title = {{There is More to Typing Than Speed: Expressive Mobile Touch Keyboards via Dynamic Font Personalisation}}, year = {2015}, address = {New York, NY, USA}, note = {buschek2015mobilehci}, pages = {125--130}, publisher = {Association for Computing Machinery}, series = {MobileHCI '15}, abstract = {Typing is a common task on mobile devices and has been widely addressed in HCI research, mostly regarding quantitative factors such as error rates and speed. Qualitative aspects, like personal expressiveness, have received less attention. This paper makes individual typing behaviour visible to the users to render mobile typing more personal and expressive in varying contexts: We introduce a dynamic font personalisation framework, TapScript, which adapts a finger-drawn font according to user behaviour and context, such as finger placement, device orientation and movements - resulting in a handwritten-looking font. We implemented TapScript for evaluation with an online survey (N=91) and a field study with a chat app (N=11). Looking at resulting fonts, survey participants distinguished pairs of typists with 84.5% accuracy and walking/sitting with 94.8%. Study participants perceived fonts as individual and the chat experience as personal. They also made creative explicit use of font adaptations.}, acmid = {2785844}, doi = {10.1145/2785830.2785844}, isbn = {978-1-4503-3652-9}, keywords = {Font Personalisation, Mobile, Touch Typing}, location = {Copenhagen, Denmark}, numpages = {6}, timestamp = {2015.08.23}, url = {http://www.florian-alt.org/unibw/wp-content/publications/buschek2015mobilehci.pdf}, }

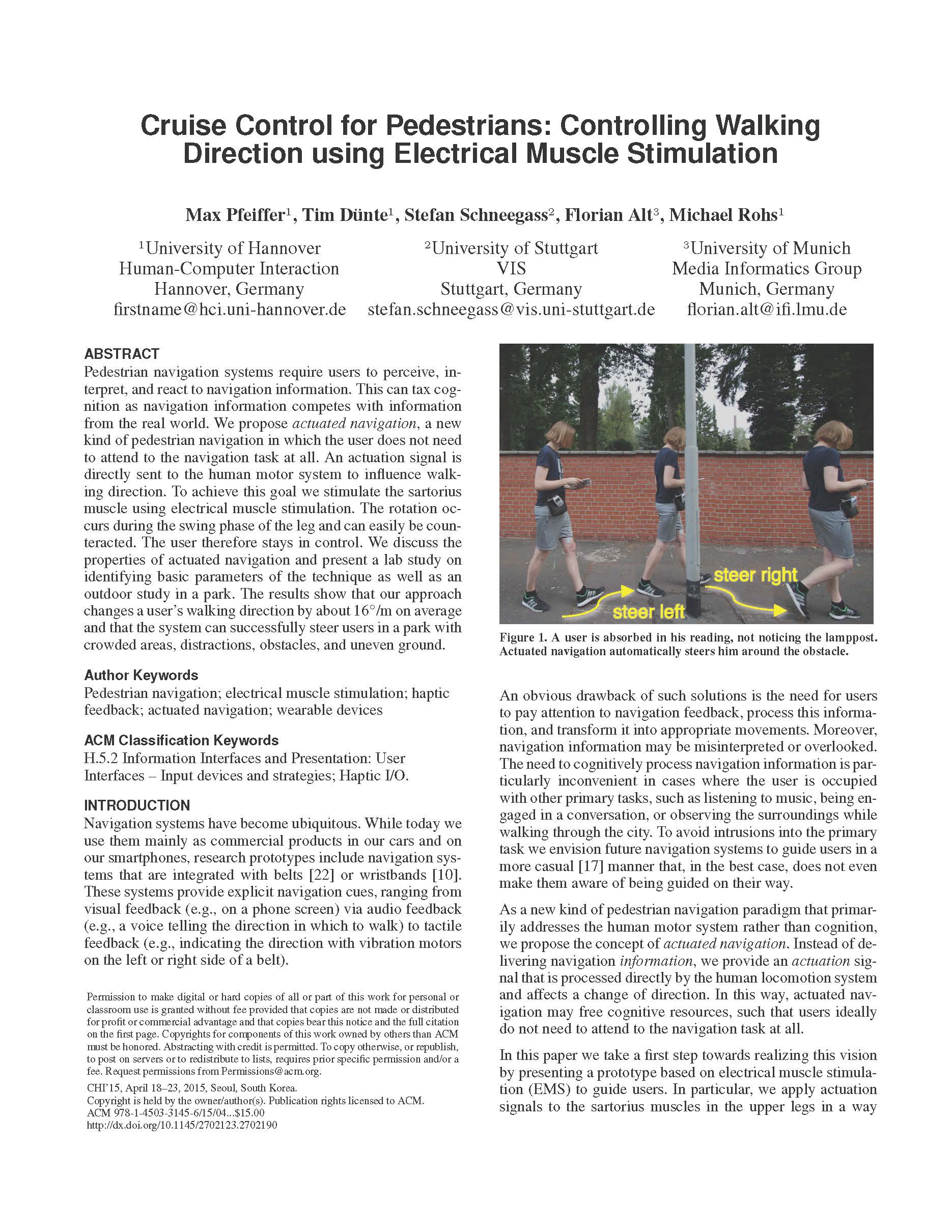

- Best of CHI Honorable Mention Award, CHI’15

M. Pfeiffer, T. Dünte, S. Schneegass, F. Alt, and M. Rohs. Cruise Control for Pedestrians: Controlling Walking Direction Using Electrical Muscle Stimulation. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (CHI ’15), Association for Computing Machinery, New York, NY, USA, 2015, p. 2505–2514. doi:10.1145/2702123.2702190

[BibTeX] [Abstract] [Download PDF]Pedestrian navigation systems require users to perceive, interpret, and react to navigation information. This can tax cognition as navigation information competes with information from the real world. We propose actuated navigation, a new kind of pedestrian navigation in which the user does not need to attend to the navigation task at all. An actuation signal is directly sent to the human motor system to influence walking direction. To achieve this goal we stimulate the sartorius muscle using electrical muscle stimulation. The rotation occurs during the swing phase of the leg and can easily be counteracted. The user therefore stays in control. We discuss the properties of actuated navigation and present a lab study on identifying basic parameters of the technique as well as an outdoor study in a park. The results show that our approach changes a user’s walking direction by about 16°/m on average and that the system can successfully steer users in a park with crowded areas, distractions, obstacles, and uneven ground.

@InProceedings{pfeiffer2015chi, author = {Pfeiffer, Max and D\"{u}nte, Tim and Schneegass, Stefan and Alt, Florian and Rohs, Michael}, booktitle = {{Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems}}, title = {{Cruise Control for Pedestrians: Controlling Walking Direction Using Electrical Muscle Stimulation}}, year = {2015}, address = {New York, NY, USA}, note = {pfeiffer2015chi}, pages = {2505--2514}, publisher = {Association for Computing Machinery}, series = {CHI '15}, abstract = {Pedestrian navigation systems require users to perceive, interpret, and react to navigation information. This can tax cognition as navigation information competes with information from the real world. We propose actuated navigation, a new kind of pedestrian navigation in which the user does not need to attend to the navigation task at all. An actuation signal is directly sent to the human motor system to influence walking direction. To achieve this goal we stimulate the sartorius muscle using electrical muscle stimulation. The rotation occurs during the swing phase of the leg and can easily be counteracted. The user therefore stays in control. We discuss the properties of actuated navigation and present a lab study on identifying basic parameters of the technique as well as an outdoor study in a park. The results show that our approach changes a user's walking direction by about 16°/m on average and that the system can successfully steer users in a park with crowded areas, distractions, obstacles, and uneven ground.}, acmid = {2702190}, doi = {10.1145/2702123.2702190}, isbn = {978-1-4503-3145-6}, keywords = {actuated navigation, electrical muscle stimulation, haptic feedback, pedestrian navigation, wearable devices}, location = {Seoul, Republic of Korea}, numpages = {10}, timestamp = {2015.04.28}, url = {http://www.florian-alt.org/unibw/wp-content/publications/pfeiffer2015chi.pdf}, }

- Best Paper Award, CHI’12

J. Müller, R. Walter, G. Bailly, M. Nischt, and F. Alt. Looking Glass: A Field Study on Noticing Interactivity of a Shop Window. In Proceedings of the 2012 Association for Computing Machinery Conference on Human Factors in Computing Systems (CHI’12), Association for Computing Machinery, New York, NY, USA, 2012, p. 297–306. doi:10.1145/2207676.2207718

[BibTeX] [Abstract] [Download PDF]In this paper we present our findings from a lab and a field study investigating how passers-by notice the interactivity of public displays. We designed an interactive installation that uses visual feedback to the incidental movements of passers-by to communicate its interactivity. The lab study reveals: (1) Mirrored user silhouettes and images are more effective than avatar-like representations. (2) It takes time to notice the interactivity (approx. 1.2s). In the field study, three displays were installed during three weeks in shop windows, and data about 502 interaction sessions were collected. Our observations show: (1) Significantly more passers-by interact when immediately showing the mirrored user image (+90%) or silhouette (+47%) compared to a traditional attract sequence with call-to-action. (2) Passers-by often notice interactivity late and have to walk back to interact (the landing effect). (3) If somebody is already interacting, others begin interaction behind the ones already interacting, forming multiple rows (the honeypot effect). Our findings can be used to design public display applications and shop windows that more effectively communicate interactivity to passers-by.

@InProceedings{mueller2012chi, author = {M\"{u}ller, J\"{o}rg and Walter, Robert and Bailly, Gilles and Nischt, Michael and Alt, Florian}, booktitle = {{Proceedings of the 2012 Association for Computing Machinery Conference on Human Factors in Computing Systems}}, title = {{Looking Glass: A Field Study on Noticing Interactivity of a Shop Window}}, year = {2012}, address = {New York, NY, USA}, month = {apr}, note = {mueller2012chi}, pages = {297--306}, publisher = {Association for Computing Machinery}, series = {CHI'12}, abstract = {In this paper we present our findings from a lab and a field study investigating how passers-by notice the interactivity of public displays. We designed an interactive installation that uses visual feedback to the incidental movements of passers-by to communicate its interactivity. The lab study reveals: (1) Mirrored user silhouettes and images are more effective than avatar-like representations. (2) It takes time to notice the interactivity (approx. 1.2s). In the field study, three displays were installed during three weeks in shop windows, and data about 502 interaction sessions were collected. Our observations show: (1) Significantly more passers-by interact when immediately showing the mirrored user image (+90%) or silhouette (+47%) compared to a traditional attract sequence with call-to-action. (2) Passers-by often notice interactivity late and have to walk back to interact (the landing effect). (3) If somebody is already interacting, others begin interaction behind the ones already interacting, forming multiple rows (the honeypot effect). 
Our findings can be used to design public display applications and shop windows that more effectively communicate interactivity to passers-by.}, acmid = {2207718}, doi = {10.1145/2207676.2207718}, isbn = {978-1-4503-1015-4}, keywords = {interactivity, noticing interactivity, public displays, User representation}, location = {Austin, Texas, USA}, numpages = {10}, owner = {flo}, timestamp = {2012.05.01}, url = {http://www.florian-alt.org/unibw/wp-content/publications/mueller2012chi.pdf}, }

- Best Paper Award, AmI’09

F. Alt, M. Balz, S. Kristes, A. S. Shirazi, J. Mennenöh, A. Schmidt, H. Schröder, and M. Gödicke. Adaptive User Profiles in Pervasive Advertising Environments. In Proceedings of the European Conference on Ambient Intelligence (AmI’09), Springer-Verlag, Berlin, Heidelberg, 2009, p. 276–286. doi:10.1007/978-3-642-05408-2_32

Nowadays modern advertising environments try to provide more efficient ads by targeting customers based on their interests. Various approaches exist today as to how information about the users’ interests can be gathered. Users can deliberately and explicitly provide this information, or users’ shopping behaviors can be analyzed implicitly. We implemented an advertising platform to simulate an advertising environment and present adaptive profiles, which let users set up profiles based on a self-assessment and enhance those profiles with information about their real shopping behavior as well as about their activity intensity. Additionally, we explain how pervasive technologies such as Bluetooth can be used to create a profile anonymously and unobtrusively.

@InProceedings{alt2009ami, author = {Alt, Florian and Balz, Moritz and Kristes, Stefanie and Shirazi, Alireza Sahami and Mennen\"{o}h, Julian and Schmidt, Albrecht and Schr\"{o}der, Hendrik and G\"{o}dicke, Michael}, booktitle = {{Proceedings of the European Conference on Ambient Intelligence}}, title = {{Adaptive User Profiles in Pervasive Advertising Environments}}, year = {2009}, address = {Berlin, Heidelberg}, month = {nov}, note = {alt2009ami}, pages = {276--286}, publisher = {Springer-Verlag}, series = {AmI'09}, abstract = {Nowadays modern advertising environments try to provide more efficient ads by targeting costumers based on their interests. Various approaches exist today as to how information about the users’ interests can be gathered. Users can deliberately and explicitly provide this information or user’s shopping behaviors can be analyzed implicitly. We implemented an advertising platform to simulate an advertising environment and present adaptive profiles, which let users setup profiles based on a self-assessment, and enhance those profiles with information about their real shopping behavior as well as about their activity intensity. Additionally, we explain how pervasive technologies such as Bluetooth can be used to create a profile anonymously and unobtrusively.}, acmid = {1694666}, doi = {10.1007/978-3-642-05408-2_32}, isbn = {978-3-642-05407-5}, location = {Salzburg, Austria}, numpages = {11}, timestamp = {2009.11.01}, url = {http://www.florian-alt.org/unibw/wp-content/publications/alt2009ami.pdf}, }

Reviews

- CHI 2023 Papers Special Recognition for exceptional reviews

- CHI 2022 Special Recognition for exceptional reviews (3)

- IMWUT 2022 May Special Recognition for exceptional reviews

- IMWUT 2020 May Special Recognition for exceptional reviews

- UIST 2020 Special Recognition for exceptional reviews

- IMWUT 2020 Special Recognition for exceptional reviews

- CHI 2019 Special Recognition for exceptional reviews (2)

- CHI 2016 Special Recognition for exceptional reviews

- CHI 2015 Special Recognition for exceptional reviews

- CHI 2014 Special Recognition for exceptional reviews