2025

S. Mansour, V. Winterhalter, F. Alt, and V. Paneva. A Multi-Layered Privacy Permission Framework for Extended Reality. In Proceedings of the New Security Paradigms Workshop (NSPW ’25), Association for Computing Machinery, New York, NY, USA, 2025.

[BibTeX] [Abstract] [PDF]

Extended Reality (XR) systems bring arrays of sensors closer to the user’s body, enabling the collection of extensive user and contextual data, from motion and biometrics to behavioral analytics, that users might not be aware they are sharing. This poses significant risks to users’ privacy. Yet, despite the immersive and dynamic nature of XR, most platforms still rely on static, text-based privacy mechanisms inherited from traditional 2D interfaces. We propose a new paradigm of continuous consent in XR, where privacy decisions unfold as a relational, context-aware, and renegotiable process embedded in the experience rather than as a single consent event. To this end, we propose a Multi-Layered Privacy Framework spanning five interdependent layers: regulatory compliance, technical implementation, permission models, user experience, and user perception and cognition. We then introduce the User Privacy Journey Model, which operationalizes the framework as a sequential user pathway: from onboarding and contextual prompts to in-experience control and post-session review, along with the XR Privacy Checklist to support practical adoption. By rethinking consent as a continuous journey, we present a new paradigm for XR privacy, one that opens a new research perspective on what “informed” consent means in immersive environments where the boundaries between self, system, and space are increasingly blurred.

@InProceedings{mansour2025nspw,

author = {Mansour, Shady AND Winterhalter, Verena AND Alt, Florian AND Paneva, Viktorija},

booktitle = {{Proceedings of the New Security Paradigms Workshop}},

title = {{A Multi-Layered Privacy Permission Framework for Extended Reality}},

year = {2025},

address = {New York, NY, USA},

note = {mansour2025nspw},

publisher = {Association for Computing Machinery},

series = {NSPW '25},

abstract = {Extended Reality (XR) systems bring arrays of sensors closer to the user's body, enabling the collection of extensive user and contextual data, from motion and biometrics to behavioral analytics, that users might not be aware they are sharing. This poses significant risks to users' privacy. Yet, despite the immersive and dynamic nature of XR, most platforms still rely on static, text-based privacy mechanisms inherited from traditional 2D interfaces. We propose a new paradigm of \textit{continuous consent in XR}, where privacy decisions unfold as a relational, context-aware, and renegotiable process embedded in the experience rather than as a single consent event. To this end, we propose a \emph{Multi-Layered Privacy Framework} spanning five interdependent layers: regulatory compliance, technical implementation, permission models, user experience, and user perception and cognition. We then introduce the \emph{User Privacy Journey Model}, which operationalizes the framework as a sequential user pathway: from onboarding and contextual prompts to in-experience control and post-session review, along with the \emph{XR Privacy Checklist} to support practical adoption. By rethinking consent as a continuous journey, we present a new paradigm for XR privacy, one that opens a new research perspective on what "informed" consent means in immersive environments where the boundaries between self, system, and space are increasingly blurred.},

keywords = {Security User Interfaces, Privacy Awareness, Public Displays, Behaviour Change},

timestamp = {2026.01.01},

url = {http://www.florian-alt.org/unibw/wp-content/publications/mansour2025nspw.pdf},

}

F. Dietz, P. Heubl, L. Haliburton, D. Bothe, J. Hörnemann, A. Sasse, and F. Alt. A Platform for Physiological and Behavioral Security. In Proceedings of the New Security Paradigms Workshop (NSPW ’25), Association for Computing Machinery, New York, NY, USA, 2025.

[BibTeX] [Abstract] [PDF]

Human-centered security research traditionally leverages self-reports and high-level behavioral data. However, the increasing ubiquity of sensors integrated into personal, wearable devices (e.g., smartphones, smartwatches) and in users’ environments (e.g., cameras) enables researchers to unobtrusively collect rich physiological and behavioral signals. These real-time data streams can reveal user states—such as attention or workload—that can be employed to design adaptive security mechanisms. In this paper, we present a platform that supports designing, building, and evaluating next-generation user interfaces that leverage physiological and behavioral data for enhanced security. First, we introduce the physio-behavioral security paradigm, highlighting how sensor-based insights into user states can inform individualized security interventions and accurately identify moments of vulnerability. We then outline the requirements, system architecture, and implementation details of the platform, illustrating how multiple data streams (e.g., gaze, heart rate, keystrokes, mouse movements) are integrated and securely processed. Finally, we report on an exploratory deployment in a mid-sized organization, showcasing how the tool captures real-time security behaviors and enables context-aware interventions. The deployment yields insights into factors influencing acceptance across different stakeholders (management, IT department, employees). Our results suggest that adaptive approaches, informed by physiological and behavioral signals, can improve security outcomes and user acceptance.

@InProceedings{dietz2025nspw,

author = {Dietz, Felix AND Heubl, Peter AND Haliburton, Luke AND Bothe, David AND Hörnemann, Jan AND Sasse, Angela AND Alt, Florian},

booktitle = {{Proceedings of the New Security Paradigms Workshop}},

title = {{A Platform for Physiological and Behavioral Security}},

year = {2025},

address = {New York, NY, USA},

note = {dietz2025nspw},

publisher = {Association for Computing Machinery},

series = {NSPW '25},

abstract = {Human-centered security research traditionally leverages self-reports and high-level behavioral data. However, the increasing ubiquity of sensors integrated into personal, wearable devices (e.g., smartphones, smartwatches) and in users’ environments (e.g., cameras) enables researchers to unobtrusively collect rich physiological and behavioral signals. These real-time data streams can reveal user states—such as attention or workload—that can be employed to design adaptive security mechanisms. In this paper, we present a platform that supports designing, building, and evaluating next-generation user interfaces that leverage physiological and behavioral data for enhanced security. First, we introduce the physio-behavioral security paradigm, highlighting how sensor-based insights into user states can inform individualized security interventions and accurately identify moments of vulnerability. We then outline the requirements, system architecture, and implementation details of the platform, illustrating how multiple data streams (e.g., gaze, heart rate, keystrokes, mouse movements) are integrated and securely processed. Finally, we report on an exploratory deployment in a mid-sized organization, showcasing how the tool captures real-time security behaviors and enables context-aware interventions. The deployment yields insights into factors influencing acceptance across different stakeholders (management, IT department, employees). Our results suggest that adaptive approaches, informed by physiological and behavioral signals, can improve security outcomes and user acceptance.},

keywords = {Security User Interfaces, Privacy Awareness, Public Displays, Behaviour Change},

timestamp = {2026.01.01},

url = {http://www.florian-alt.org/unibw/wp-content/publications/dietz2025nspw.pdf},

}

D. Murtezaj, J. Dinic, V. Paneva, and F. Alt. Roby on a Mission: Promoting Awareness and Adoption of Password Managers Through Public Security User Interfaces. In Proceedings of the 24th International Conference on Mobile and Ubiquitous Multimedia (MUM ’25), Association for Computing Machinery, New York, NY, USA, 2025. doi:10.1145/3626705.3627770

[BibTeX] [PDF]

@InProceedings{murtezaj2025mum,

author = {Doruntina Murtezaj AND Jovana Dinic AND Viktorija Paneva and Alt, Florian},

booktitle = {{Proceedings of the 24th International Conference on Mobile and Ubiquitous Multimedia}},

title = {{Roby on a Mission: Promoting Awareness and Adoption of Password Managers Through Public Security User Interfaces}},

year = {2025},

address = {New York, NY, USA},

note = {murtezaj2025mum},

publisher = {Association for Computing Machinery},

series = {MUM '25},

doi = {10.1145/3626705.3627770},

keywords = {metaphors, privacy, security, symbols},

location = {Enna, Italy},

timestamp = {2025.12.25},

url = {http://www.florian-alt.org/unibw/wp-content/publications/murtezaj2025mum.pdf},

}

O. Hein, S. Wackerl, C. Ou, F. Alt, and F. Chiossi. At the Speed of the Heart: Evaluating Physiologically-Adaptive Visualizations for Supporting Engagement in Biking Exergaming in Virtual Reality. In Proceedings of the First Annual Conference on Human-Computer Interaction and Sports (SportsHCI ’25), Association for Computing Machinery, New York, NY, USA, 2025. doi:10.1145/3749385.3749398

[BibTeX] [Abstract] [PDF]

[BibTeX] [Abstract] [PDF]

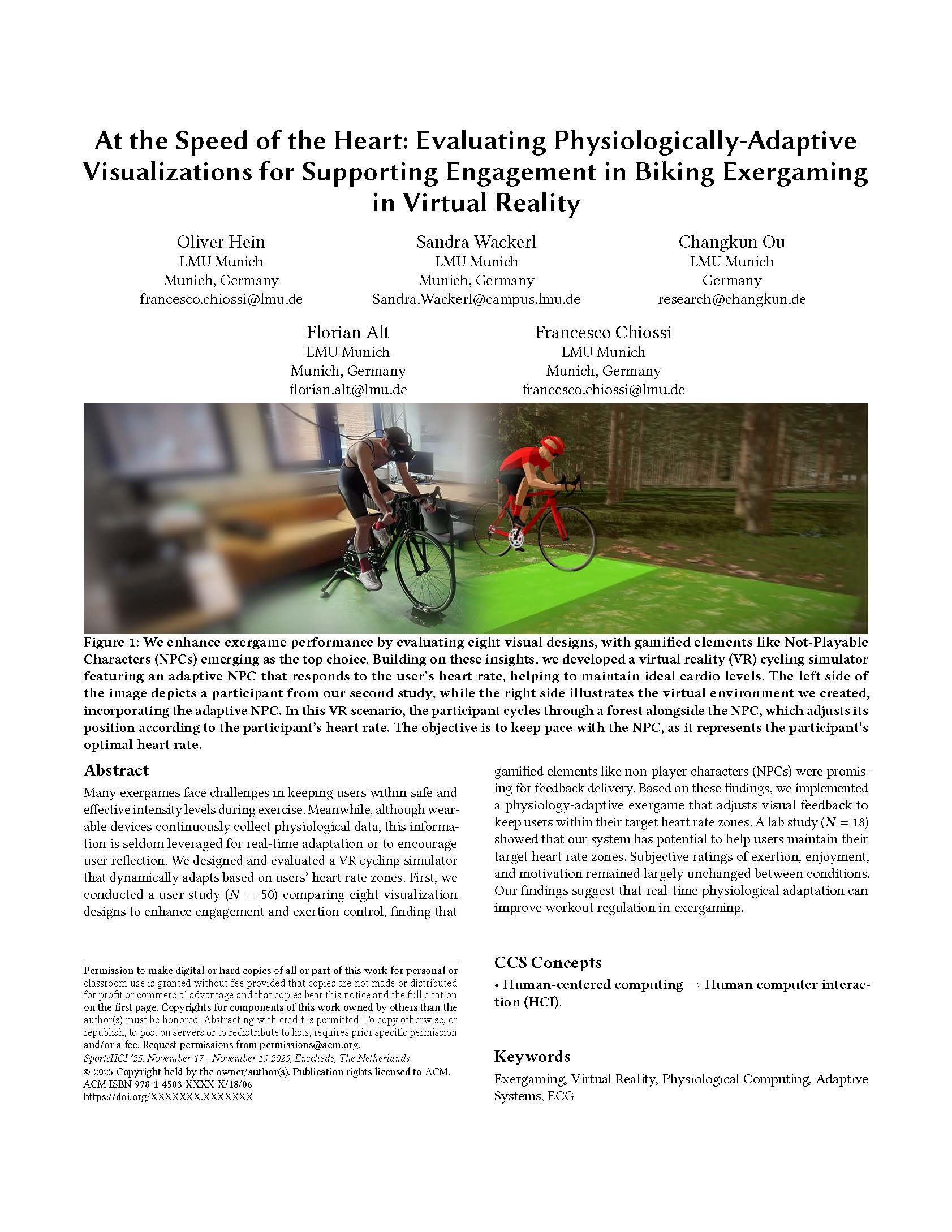

Many exergames face challenges in keeping users within safe and effective intensity levels during exercise. Meanwhile, although wearable devices continuously collect physiological data, this information is seldom leveraged for real-time adaptation or to encourage user reflection. We designed and evaluated a VR cycling simulator that dynamically adapts based on users’ heart rate zones. First, we conducted a user study (N = 50) comparing eight visualization designs to enhance engagement and exertion control, finding that gamified elements like non-player characters (NPCs) were promising for feedback delivery. Based on these findings, we implemented a physiology-adaptive exergame that adjusts visual feedback to keep users within their target heart rate zones. A lab study (N = 18) showed that our system has potential to help users maintain their target heart rate zones. Subjective ratings of exertion, enjoyment, and motivation remained largely unchanged between conditions. Our findings suggest that real-time physiological adaptation through NPC visualizations can improve workout regulation in exergaming.

@InProceedings{hein2025sportshci,

author = {Hein, Oliver and Wackerl, Sandra and Ou, Changkun and Alt, Florian and Chiossi, Francesco},

booktitle = {Proceedings of the First Annual Conference on Human-Computer Interaction and Sports},

title = {At the Speed of the Heart: Evaluating Physiologically-Adaptive Visualizations for Supporting Engagement in Biking Exergaming in Virtual Reality},

year = {2025},

address = {New York, NY, USA},

note = {hein2025sportshci},

publisher = {Association for Computing Machinery},

series = {SportsHCI '25},

abstract = {Many exergames face challenges in keeping users within safe and effective intensity levels during exercise. Meanwhile, although wearable devices continuously collect physiological data, this information is seldom leveraged for real-time adaptation or to encourage user reflection. We designed and evaluated a VR cycling simulator that dynamically adapts based on users’ heart rate zones. First, we conducted a user study (N = 50) comparing eight visualization designs to enhance engagement and exertion control, finding that gamified elements like non-player characters (NPCs) were promising for feedback delivery. Based on these findings, we implemented a physiology-adaptive exergame that adjusts visual feedback to keep users within their target heart rate zones. A lab study (N = 18) showed that our system has potential to help users maintain their target heart rate zones. Subjective ratings of exertion, enjoyment, and motivation remained largely unchanged between conditions. Our findings suggest that real-time physiological adaptation through NPC visualizations can improve workout regulation in exergaming.},

articleno = {15},

doi = {10.1145/3749385.3749398},

isbn = {9798400714283},

keywords = {Exergaming, Virtual Reality, Physiological Computing, Adaptive Systems, ECG},

numpages = {18},

timestamp = {2025.11.20},

url = {http://www.florian-alt.org/unibw/wp-content/publications/hein2025sportshci.pdf},

}

V. Paneva, V. Winterhalter, F. Augustinowski, and F. Alt. User Understanding of Privacy Permissions in Mobile Augmented Reality: Perceptions and Misconceptions. Proc. ACM Hum.-Comput. Interact., vol. 9, iss. 5, 2025. doi:10.1145/3743738

[BibTeX] [Abstract] [PDF]

Mobile Augmented Reality (AR) applications leverage various sensors to provide immersive user experiences. However, their reliance on diverse data sources introduces significant privacy challenges. This paper investigates user perceptions and understanding of privacy permissions in mobile AR apps through an analysis of existing applications and an online survey of 120 participants. Findings reveal common misconceptions, including confusion about how permissions relate to specific AR functionalities (e.g., location and measurement of physical distances), and misinterpretations of permission labels (e.g., conflating camera and gallery access). We identify a set of actionable implications for designing more usable and transparent privacy mechanisms tailored to mobile AR technologies, including contextual explanations, modular permission requests, and clearer permission labels. These findings offer actionable guidance for developers, researchers, and policymakers working to enhance privacy frameworks in mobile AR.

@Article{paneva2025mobilehci,

author = {Paneva, Viktorija and Winterhalter, Verena and Augustinowski, Franziska and Alt, Florian},

journal = {{Proc. ACM Hum.-Comput. Interact.}},

title = {{User Understanding of Privacy Permissions in Mobile Augmented Reality: Perceptions and Misconceptions}},

year = {2025},

month = sep,

note = {paneva2025mobilehci},

number = {5},

volume = {9},

abstract = {Mobile Augmented Reality (AR) applications leverage various sensors to provide immersive user experiences. However, their reliance on diverse data sources introduces significant privacy challenges. This paper investigates user perceptions and understanding of privacy permissions in mobile AR apps through an analysis of existing applications and an online survey of 120 participants. Findings reveal common misconceptions, including confusion about how permissions relate to specific AR functionalities (e.g., location and measurement of physical distances), and misinterpretations of permission labels (e.g., conflating camera and gallery access). We identify a set of actionable implications for designing more usable and transparent privacy mechanisms tailored to mobile AR technologies, including contextual explanations, modular permission requests, and clearer permission labels. These findings offer actionable guidance for developers, researchers, and policymakers working to enhance privacy frameworks in mobile AR.},

address = {New York, NY, USA},

articleno = {MHCI037},

doi = {10.1145/3743738},

issue_date = {September 2025},

keywords = {Usable Privacy, Privacy Permissions, Augmented Reality, Mobile Interaction, Smartphones},

numpages = {17},

publisher = {Association for Computing Machinery},

timestamp = {2025.09.23},

url = {https://www.unibw.de/usable-security-and-privacy/publikationen/pdf/paneva2025mobilehci.pdf},

}

S. Delgado Rodriguez, S. E. Winterberg, F. Bumiller, F. Dietz, F. Alt, and M. Hassib. XSec Companions: Exploring the Design of Cybersecurity Companions. In Proceedings of the 2025 European Symposium on Usable Security (EuroUSEC ’25), Association for Computing Machinery, New York, NY, USA, 2025.

[BibTeX] [PDF]

@InProceedings{delgado2025eurousec,

author = {Delgado Rodriguez, Sarah AND Sarah Elisabeth Winterberg AND Franziska Bumiller AND Felix Dietz AND Florian Alt AND Mariam Hassib},

booktitle = {{Proceedings of the 2025 European Symposium on Usable Security}},

title = {{XSec Companions: Exploring the Design of Cybersecurity Companions}},

year = {2025},

address = {New York, NY, USA},

note = {delgado2025eurousec},

publisher = {Association for Computing Machinery},

series = {EuroUSEC '25},

location = {Strathclyde, UK},

timestamp = {2025.09.17},

url = {http://www.florian-alt.org/unibw/wp-content/publications/delgado2025eurousec.pdf},

}

Y. Abdrabou, R. Rivu, A. Fischer, A. Saad, H. Farzand, P. Knierim, and F. Alt. Investigating Natural Shoulder Surfing Behavior in the Wild: A Research Space and Case Study. In Proceedings of the 20th IFIP TC 13 International Conference on Human-Computer Interaction (INTERACT ’25), Springer Nature, Cham, Switzerland, 2025.

[BibTeX] [PDF]

@InProceedings{abdrabou2025interact,

author = {Yasmeen Abdrabou AND Radiah Rivu AND Alessia Fischer AND Alia Saad AND Habiba Farzand AND Pascal Knierim AND Florian Alt},

booktitle = {{Proceedings of the 20th IFIP TC 13 International Conference on Human-Computer Interaction}},

title = {{Investigating Natural Shoulder Surfing Behavior in the Wild: A Research Space and Case Study}},

year = {2025},

address = {Cham, Switzerland},

note = {abdrabou2025interact},

publisher = {Springer Nature},

series = {INTERACT '25},

language = {English},

location = {Belo Horizonte, Brazil},

owner = {florian},

timestamp = {2025.09.03},

url = {http://www.florian-alt.org/unibw/wp-content/publications/abdrabou2025interact.pdf},

}

O. Hein, S. Gokcel, and F. Alt. From Ears to Eyes: The Impact of Augmented Text Integration on Audio Guidance. In Proceedings of the 20th IFIP TC 13 International Conference on Human-Computer Interaction (INTERACT ’25), Springer Nature, Cham, Switzerland, 2025.

[BibTeX] [PDF]

@InProceedings{hein2025interact,

author = {Oliver Hein AND Sude Gokcel AND Florian Alt},

booktitle = {{Proceedings of the 20th IFIP TC 13 International Conference on Human-Computer Interaction}},

title = {{From Ears to Eyes: The Impact of Augmented Text Integration on Audio Guidance}},

year = {2025},

address = {Cham, Switzerland},

note = {hein2025interact},

publisher = {Springer Nature},

series = {INTERACT '25},

language = {English},

location = {Belo Horizonte, Brazil},

owner = {florian},

timestamp = {2025.09.03},

url = {http://www.florian-alt.org/unibw/wp-content/publications/hein2025interact.pdf},

}

M. Käsemodel, V. Winterhalter, F. Heisel, F. Alt, and B. Pfleging. BlueTOTP: Designing A Phishing-Resistant and User-Friendly Approach to Two-Factor Authentication. In Proceedings of the 20th IFIP TC 13 International Conference on Human-Computer Interaction (INTERACT ’25), Springer Nature, Cham, Switzerland, 2025.

[BibTeX] [PDF]

@InProceedings{kaesemodel2025interact,

author = {Marian Käsemodel AND Verena Winterhalter AND Felix Heisel AND Florian Alt AND Bastian Pfleging},

booktitle = {{Proceedings of the 20th IFIP TC 13 International Conference on Human-Computer Interaction}},

title = {{BlueTOTP: Designing A Phishing-Resistant and User-Friendly Approach to Two-Factor Authentication}},

year = {2025},

address = {Cham, Switzerland},

note = {kaesemodel2025interact},

publisher = {Springer Nature},

series = {INTERACT '25},

language = {English},

location = {Belo Horizonte, Brazil},

owner = {florian},

timestamp = {2025.09.03},

url = {http://www.florian-alt.org/unibw/wp-content/publications/kaesemodel2025interact.pdf},

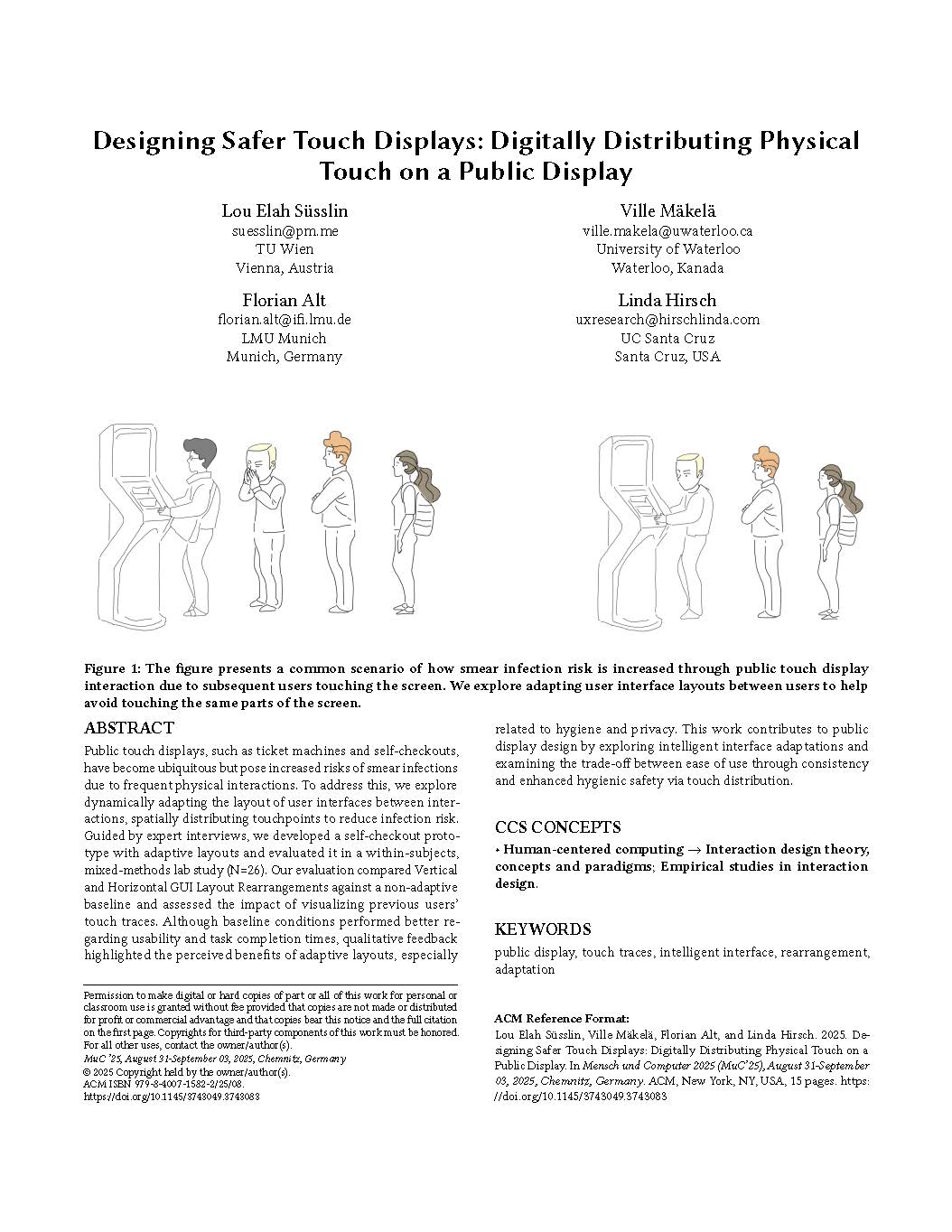

}

L. E. Süsslin, V. Mäkelä, F. Alt, and L. Hirsch. Designing Safer Touch Displays: Digitally Distributing Physical Touch on a Public Display. In Proceedings of Mensch und Computer 2025 (MuC ’25), Association for Computing Machinery, New York, NY, USA, 2025.

[BibTeX] [PDF]

@InProceedings{suesslin2025muc,

author = {Süsslin, Lou Elah and M\"{a}kel\"{a}, Ville and Alt, Florian AND Hirsch, Linda},

booktitle = {Proceedings of Mensch und Computer 2025},

title = {{Designing Safer Touch Displays: Digitally Distributing Physical Touch on a Public Display}},

year = {2025},

address = {New York, NY, USA},

note = {suesslin2025muc},

publisher = {Association for Computing Machinery},

series = {MuC '25},

location = {Chemnitz, Germany},

numpages = {12},

timestamp = {2025.08.30},

url = {http://www.florian-alt.org/unibw/wp-content/publications/suesslin2025muc.pdf},

}

A. Triantafyllopoulos, A. A. Spiesberger, I. Tsangko, X. Jing, V. Distler, F. Dietz, F. Alt, and B. W. Schuller. Vishing: Detecting social engineering in spoken communication — A first survey & urgent roadmap to address an emerging societal challenge. Computer Speech & Language, vol. 94, p. 101802, 2025. doi:10.1016/j.csl.2025.101802

[BibTeX] [Abstract] [PDF]

Vishing – the use of voice calls for phishing – is a form of Social Engineering (SE) attacks. The latter have become a pervasive challenge in modern societies, with over 300,000 yearly victims in the US alone. An increasing number of those attacks is conducted via voice communication, be it through machine-generated ‘robocalls’ or human actors. The goals of ‘social engineers’ can be manifold, from outright fraud to more subtle forms of persuasion. Accordingly, social engineers adopt multi-faceted strategies for voice-based attacks, utilising a variety of ‘tricks’ to exert influence and achieve their goals. Importantly, while organisations have set in place a series of guardrails against other types of SE attacks, voice calls still remain ‘open ground’ for potential bad actors. In the present contribution, we provide an overview of the existing speech technology subfields that need to coalesce into a protective net against one of the major challenges to societies worldwide. Given the dearth of speech science and technology works targeting this issue, we have opted for a narrative review that bridges the gap between the existing psychological literature on the topic and research that has been pursued in parallel by the speech community on some of the constituent constructs. Our review reveals that very little literature exists on addressing this very important topic from a speech technology perspective, an omission further exacerbated by the lack of available data. Thus, our main goal is to highlight this gap and sketch out a roadmap to mitigate it, beginning with the psychological underpinnings of vishing, which primarily include deception and persuasion strategies, continuing with the speech-based approaches that can be used to detect those, as well as the generation and detection of AI-based vishing attempts, and close with a discussion of ethical and legal considerations.

@Article{triantafyllopoulos2025csl,

author = {Andreas Triantafyllopoulos and Anika A. Spiesberger and Iosif Tsangko and Xin Jing and Verena Distler and Felix Dietz and Florian Alt and Björn W. Schuller},

journal = {Computer Speech & Language},

title = {Vishing: Detecting social engineering in spoken communication — A first survey & urgent roadmap to address an emerging societal challenge},

year = {2025},

issn = {0885-2308},

note = {triantafyllopoulos2025csl},

pages = {101802},

volume = {94},

abstract = {Vishing – the use of voice calls for phishing – is a form of Social Engineering (SE) attacks. The latter have become a pervasive challenge in modern societies, with over 300,000 yearly victims in the US alone. An increasing number of those attacks is conducted via voice communication, be it through machine-generated ‘robocalls’ or human actors. The goals of ‘social engineers’ can be manifold, from outright fraud to more subtle forms of persuasion. Accordingly, social engineers adopt multi-faceted strategies for voice-based attacks, utilising a variety of ‘tricks’ to exert influence and achieve their goals. Importantly, while organisations have set in place a series of guardrails against other types of SE attacks, voice calls still remain ‘open ground’ for potential bad actors. In the present contribution, we provide an overview of the existing speech technology subfields that need to coalesce into a protective net against one of the major challenges to societies worldwide. Given the dearth of speech science and technology works targeting this issue, we have opted for a narrative review that bridges the gap between the existing psychological literature on the topic and research that has been pursued in parallel by the speech community on some of the constituent constructs. Our review reveals that very little literature exists on addressing this very important topic from a speech technology perspective, an omission further exacerbated by the lack of available data. Thus, our main goal is to highlight this gap and sketch out a roadmap to mitigate it, beginning with the psychological underpinnings of vishing, which primarily include deception and persuasion strategies, continuing with the speech-based approaches that can be used to detect those, as well as the generation and detection of AI-based vishing attempts, and close with a discussion of ethical and legal considerations.},

doi = {10.1016/j.csl.2025.101802},

keywords = {Vishing, Social engineering, Human–computer interaction, Computational paralinguistics},

timestamp = {2025.08.06},

url = {https://www.sciencedirect.com/science/article/pii/S0885230825000270},

}

F. Sharevski, V. Distler, and F. Alt. “Helps me Take the Post With a Grain of Salt:” Soft Moderation Effects on Accuracy Perceptions and Sharing Intentions of Inauthentic Political Content on X. In 34th USENIX Security Symposium (USENIX Security 25), USENIX Association, Seattle, WA, 2025.

[BibTeX] [PDF]

@InProceedings{sharevski2025usenixsecurity,

author = {Filipo Sharevski AND Verena Distler AND Florian Alt},

booktitle = {34th USENIX Security Symposium (USENIX Security 25)},

title = {{``Helps me Take the Post With a Grain of Salt:'' Soft Moderation Effects on Accuracy Perceptions and Sharing Intentions of Inauthentic Political Content on X}},

year = {2025},

address = {Seattle, WA},

month = aug,

note = {sharevski2025usenixsecurity},

publisher = {USENIX Association},

timestamp = {2025.08.05},

url = {http://florian-alt.org/unibw/wp-content/publications/sharevski2025usenixsecurity.pdf},

}

S. Delgado Rodriguez, L. Mecke, I. Prieto Romero, M. Bregenzer, and F. Alt. Let the Door Handle It: Exploring Biometric User Recognition Embedded in Doors. In Proceedings of Mensch und Computer 2025 (MuC ’25), Association for Computing Machinery, New York, NY, USA, 2025.

[BibTeX] [PDF]

@InProceedings{delgado2025muc,

author = {Delgado Rodriguez, Sarah AND Mecke, Lukas AND Prieto Romero, Ismael AND Bregenzer, Melina AND Alt, Florian},

booktitle = {Proceedings of Mensch und Computer 2025},

title = {{Let the Door Handle It: Exploring Biometric User Recognition Embedded in Doors}},

year = {2025},

address = {New York, NY, USA},

note = {delgado2025muc},

publisher = {Association for Computing Machinery},

series = {MuC '25},

location = {Chemnitz, Germany},

numpages = {12},

timestamp = {2025.08.04},

url = {http://www.florian-alt.org/unibw/wp-content/publications/delgado2025muc.pdf},

}

O. Hein, S. Wackerl, C. Ou, F. Alt, and F. Chiossi. At the Speed of the Heart: Evaluating Physiologically-Adaptive Visualizations for Supporting Engagement in Biking Exergaming in Virtual Reality. In Proceedings of the Annual Conference on Human-Computer Interaction and Sports (SportsHCI ’25), Association for Computing Machinery, New York, NY, USA, 2025.

[BibTeX] [PDF]

@InProceedings{hein2025sportshci,

author = {Oliver Hein AND Sandra Wackerl AND Changkun Ou AND Florian Alt and Francesco Chiossi},

booktitle = {Proceedings of the Annual Conference on Human-Computer Interaction and Sports},

title = {{At the Speed of the Heart: Evaluating Physiologically-Adaptive Visualizations for Supporting Engagement in Biking Exergaming in Virtual Reality}},

year = {2025},

address = {New York, NY, USA},

note = {hein2025sportshci},

publisher = {Association for Computing Machinery},

series = {SportsHCI '25},

location = {Enschede, The Netherlands},

numpages = {12},

timestamp = {2025.07.04},

url = {http://www.florian-alt.org/unibw/wp-content/publications/hein2025sportshci.pdf},

}

V. Winterhalter, V. Paneva, and F. Alt. Enhancing User Engagement with Game-Inspired Privacy Interfaces in Virtual Reality. In Adjunct Proceedings of the Twenty-First Symposium on Usable Privacy and Security (SOUPS ’25), USENIX Association, 2025.

[BibTeX] [PDF]

@InProceedings{winterhalter2025soupsadj2,

author = {Verena Winterhalter AND Viktorija Paneva AND Alt, Florian},

booktitle = {Adjunct Proceedings of the Twenty-First Symposium on Usable Privacy and Security},

title = {Enhancing User Engagement with Game-Inspired Privacy Interfaces in Virtual Reality},

year = {2025},

note = {winterhalter2025soupsadj2},

publisher = {USENIX Association},

series = {SOUPS '25},

timestamp = {2025.06.05},

url = {http://florian-alt.org/unibw/wp-content/publications/winterhalter2025soupsadj2.pdf},

}

V. Winterhalter, A. Moreno, S. Prange, H. Berger, and F. Alt. PermWatch: A Tool for In-Situ Research on Users’ Awareness and Control of Android Permissions. In Adjunct Proceedings of the Twenty-First Symposium on Usable Privacy and Security (SOUPS ’25), USENIX Association, 2025.

[BibTeX] [PDF]

@InProceedings{winterhalter2025soupsadj2,

author = {Verena Winterhalter AND Anouk Moreno AND Sarah Prange AND Harel Berger AND Florian Alt},

booktitle = {Adjunct Proceedings of the Twenty-First Symposium on Usable Privacy and Security},

title = {{PermWatch: A Tool for In-Situ Research on Users' Awareness and Control of Android Permissions}},

year = {2025},

note = {winterhalter2025soupsadj2},

publisher = {USENIX Association},

series = {SOUPS '25},

timestamp = {2025.06.05},

url = {http://florian-alt.org/unibw/wp-content/publications/winterhalter2025soupsadj2.pdf},

}

V. Paneva, V. Winterhalter, N. S. S. V. Malladi, M. Strauss, S. Schneegass, and F. Alt. Usable Privacy in Virtual Worlds: Design Implications for Data Collection Awareness and Control Interfaces in Virtual Reality. arXiv:2503.10915 [cs.HC], 2025.

[BibTeX] [PDF]

@Misc{paneva2025arxiv,

author = {Viktorija Paneva and Verena Winterhalter and Naga Sai Surya Vamsy Malladi and Marvin Strauss and Stefan Schneegass and Florian Alt},

note = {paneva2025arxiv},

title = {Usable Privacy in Virtual Worlds: Design Implications for Data Collection Awareness and Control Interfaces in Virtual Reality},

year = {2025},

archiveprefix = {arXiv},

eprint = {2503.10915},

primaryclass = {cs.HC},

timestamp = {2025.05.13},

url = {http://www.florian-alt.org/unibw/wp-content/publications/paneva2025arxiv.pdf},

}

D. Murtezaj, V. Paneva, and F. Alt. AI-Augmented Public Security Interfaces: Advancing Cybersecurity Awareness through Adaptive Learning. In Proceedings of the 2025 CHI Workshop on Augmented Educators and AI: Shaping the Future of Human-AI Collaboration in Learning, 2025.

[BibTeX] [PDF]

@InProceedings{murtezaj2025chiws,

author = {Doruntina Murtezaj AND Viktorija Paneva AND Florian Alt},

booktitle = {Proceedings of the 2025 CHI Workshop on Augmented Educators and AI: Shaping the Future of Human-AI Collaboration in Learning},

title = {{AI-Augmented Public Security Interfaces: Advancing Cybersecurity Awareness through Adaptive Learning}},

year = {2025},

note = {murtezaj2025chiws},

timestamp = {2025.05.01},

url = {http://www.florian-alt.org/unibw/wp-content/publications/murtezaj2025chiws.pdf},

}

L. Mecke, A. Mahmoud, S. Marat, and F. Alt. Exploring the effect of music on user typing and identification through keystroke dynamics. In Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems (CHI ’25), Association for Computing Machinery, New York, NY, USA, 2025. doi:10.1145/3706598.3713222

[BibTeX] [Abstract] [PDF] [Video]

This paper explores the relationship between music and keyboard typing behavior. In particular, we focus on how it affects keystroke-based authentication systems. To this end, we conducted an online experiment (N=43), where participants were asked to replicate paragraphs of text while listening to music at varying tempos and loudness levels across two sessions. Our findings reveal that listening to music leads to more errors and faster typing if the music is fast. Identification through a biometric model was improved when music was played either during its training or testing. This hints at the potential of music for increasing identification performance and a tradeoff between this benefit and user distraction. Overall, our research sheds light on typing behavior and introduces music as a subtle and effective tool to influence user typing behavior in the context of keystroke-based authentication.

@InProceedings{mecke2025chi,

author = {Lukas Mecke AND Assem Mahmoud AND Simon Marat AND Florian Alt},

booktitle = {{Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems}},

title = {Exploring the Effect of Music on User Typing and Identification through Keystroke Dynamics},

year = {2025},

address = {New York, NY, USA},

note = {mecke2025chi},

publisher = {Association for Computing Machinery},

series = {CHI ’25},

abstract = {This paper explores the relationship between music and keyboard typing behavior. In particular, we focus on how it affects keystroke-based authentication systems. To this end, we conducted an online experiment (N=43), where participants were asked to replicate paragraphs of text while listening to music at varying tempos and loudness levels across two sessions. Our findings reveal that listening to music leads to more errors and faster typing if the music is fast. Identification through a biometric model was improved when music was played either during its training or testing. This hints at the potential of music for increasing identification performance and a tradeoff between this benefit and user distraction. Overall, our research sheds light on typing behavior and introduces music as a subtle and effective tool to influence user typing behavior in the context of keystroke-based authentication.},

doi = {10.1145/3706598.3713222},

isbn = {979-8-4007-1394-1/25/04},

location = {Yokohama, Japan},

timestamp = {2025.04.24},

url = {http://florian-alt.org/unibw/wp-content/publications/mecke2025chi.pdf},

video = {mecke2025chi},

}

S. D. Rodriguez, M. Windl, F. Alt, and K. Marky. The TaPSI Research Framework – A Systematization of Knowledge on Tangible Privacy and Security Interfaces. In Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems (CHI ’25), Association for Computing Machinery, New York, NY, USA, 2025. doi:10.1145/3706598.3713968

[BibTeX] [Abstract] [PDF] [Video]

This paper presents a comprehensive Systematization of Knowledge on tangible privacy and security interfaces (TaPSI). Tangible interfaces provide physical forms for digital interactions. They can offer significant benefits for privacy and security applications by making complex and abstract security concepts more intuitive, comprehensible, and engaging. Through a literature survey, we collected and analyzed \inscopeall{} publications. We identified terminology used in these publications and addressed usable privacy and security domains, contributions, applied methods, implementation details, and opportunities or challenges inherent to TaPSI. Based on our findings, we define TaPSI and propose the TaPSI Research Framework, which guides future research by offering insights into when and how to conduct research on privacy and security involving TaPSI as well as a design space of TaPSI.

@InProceedings{delgado2025chi,

author = {Sarah Delgado Rodriguez AND Maximiliane Windl AND Florian Alt AND Karola Marky},

booktitle = {{Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems}},

title = {{The TaPSI Research Framework – A Systematization of Knowledge on Tangible Privacy and Security Interfaces}},

year = {2025},

address = {New York, NY, USA},

note = {delgado2025chi},

publisher = {Association for Computing Machinery},

series = {CHI ’25},

abstract = {This paper presents a comprehensive Systematization of Knowledge on tangible privacy and security interfaces (TaPSI). Tangible interfaces provide physical forms for digital interactions. They can offer significant benefits for privacy and security applications by making complex and abstract security concepts more intuitive, comprehensible, and engaging. Through a literature survey, we collected and analyzed \inscopeall{} publications.

We identified terminology used in these publications and addressed usable privacy and security domains, contributions, applied methods, implementation details, and opportunities or challenges inherent to TaPSI.

Based on our findings, we define TaPSI and propose the TaPSI Research Framework, which guides future research by offering insights into when and how to conduct research on privacy and security involving TaPSI as well as a design space of TaPSI.},

doi = {10.1145/3706598.3713968},

isbn = {979-8-4007-1394-1/25/04},

location = {Yokohama, Japan},

timestamp = {2025.04.24},

url = {http://florian-alt.org/unibw/wp-content/publications/delgado2025chi.pdf},

video = {delgado2025chi},

}

J. Liebers, F. Bernardi, A. Saad, L. Mecke, U. Gruenefeld, F. Alt, and S. Schneegass. Predicting Personality Traits from Hand-Tracking and Typing Behavior in Extended Reality under the Presence and Absence of Haptic Feedback. In Extended Abstracts of the 2025 CHI Conference on Human Factors in Computing Systems (CHI ’25), Association for Computing Machinery, New York, NY, USA, 2025.

[BibTeX] [Abstract] [PDF]

With the proliferation of extended realities, it becomes increasingly important to create applications that adapt themselves to the user, which enhances the user experience. One source that allows for adaptation is users’ behavior, which is implicitly captured on XR devices, such as their hand and finger movements during natural interactions. This data can be used to predict a user’s personality traits, which allows the application to adapt to the user’s needs. In this study (N=20), we explore personality prediction from hand-tracking and keystroke data during a typing activity in Augmented Virtuality and Virtual Reality. We manipulate the haptic elements, i.e., whether users type on a physical or virtual keyboard, and capture data from participants on two different days. Our best-performing model achieves an R2 of 0.4456; the main source of error stems from the manifestation of XR, and the hand-tracking data contributes most of the predictive power.

@InProceedings{liebers2025chilbw,

author = {Jonathan Liebers AND Felix Bernardi AND Alia Saad AND Lukas Mecke and Uwe Gruenefeld AND Florian Alt AND Stefan Schneegass},

booktitle = {{Extended Abstracts of the 2025 CHI Conference on Human Factors in Computing Systems}},

title = {{Predicting Personality Traits from Hand-Tracking and Typing Behavior in Extended Reality under the Presence and Absence of Haptic Feedback}},

year = {2025},

address = {New York, NY, USA},

note = {liebers2025chilbw},

publisher = {Association for Computing Machinery},

series = {CHI ’25},

abstract = {With the proliferation of extended realities, it becomes increasingly important to create applications that adapt themselves to the user, which enhances the user experience. One source that allows for adaptation is users’ behavior, which is implicitly captured on XR devices, such as their hand and finger movements during natural interactions. This data can be used to predict a user’s personality traits, which allows the application to adapt to the user’s needs. In this study (N=20), we explore personality prediction from hand-tracking and keystroke data during a typing activity in Augmented Virtuality and Virtual Reality. We manipulate the haptic elements, i.e., whether users type on a physical or virtual keyboard, and capture data from participants on two different days. Our best-performing model achieves an R2 of 0.4456; the main source of error stems from the manifestation of XR, and the hand-tracking data contributes most of the predictive power.},

location = {Yokohama, Japan},

timestamp = {2025.04.23},

url = {http://florian-alt.org/unibw/wp-content/publications/liebers2025chilbw.pdf},

}

O. Hein, D. Hirschberg, F. Alt, and P. Rauschnabel. Augmented reality in command and control processes: a comparison of presentation types for the transmission of auditive orders. In Proceedings of the international XR-Metaverse conference 2025 (XRM ’25), Springer, 2025.

[BibTeX] [Abstract]

Traditional radio communication in emergency and military operations is hindered by auditory overload, lack of visual reinforcement, and susceptibility to environmental distractions. Augmented Reality (AR) presents a promising solution by integrating visual cues with auditory messages to improve efficiency and reduce cognitive strain. This study investigates different AR-based instruction delivery methods through two independent experiments. Study A (n=200) examines the impact of continuous text vs. bullet-point presentation in AR on cognitive load and accuracy in a drawing task. Results indicate that while bullet points enable faster processing, they also lead to more errors, whereas continuous text improves accuracy but increases cognitive effort. Study B (n=9) compares audio-only, audio + text, and audio + symbols instruction methods. Findings reveal that AR-enhanced approaches significantly reduce errors and are preferred by users over audio-only instructions, demonstrating their potential in high-stress command-and-control environments. These findings align with Cognitive Load Theory (CLT), highlighting the importance of adaptive information presentation to optimize task performance and user experience. The study underscores the potential of AR to revolutionize emergency response and military communications, providing more resilient, multi-modal instruction systems for critical operations.

@InProceedings{hein2025xrm,

author = {Oliver Hein and Dominik Hirschberg AND Florian Alt AND Philipp Rauschnabel},

booktitle = {Proceedings of the International XR-Metaverse Conference 2025},

title = {Augmented Reality in Command and Control Processes: A Comparison of Presentation Types for the Transmission of Auditive Orders},

year = {2025},

note = {hein2025xrm},

publisher = {Springer},

series = {XRM '25},

abstract = {Traditional radio communication in emergency and military operations is hindered by auditory overload, lack of visual reinforcement, and susceptibility to environmental distractions. Augmented Reality (AR) presents a promising solution by integrating visual cues with auditory messages to improve efficiency and reduce cognitive strain. This study investigates different AR-based instruction delivery methods through two independent experiments. Study A (n=200) examines the impact of continuous text vs. bullet-point presentation in AR on cognitive load and accuracy in a drawing task. Results indicate that while bullet points enable faster processing, they also lead to more errors, whereas continuous text improves accuracy but increases cognitive effort. Study B (n=9) compares audio-only, audio + text, and audio + symbols instruction methods. Findings reveal that AR-enhanced approaches significantly reduce errors and are preferred by users over audio-only instructions, demonstrating their potential in high-stress command-and-control environments. These findings align with Cognitive Load Theory (CLT), highlighting the importance of adaptive information presentation to optimize task performance and user experience. The study underscores the potential of AR to revolutionize emergency response and military communications, providing more resilient, multi-modal instruction systems for critical operations.},

timestamp = {2025.04.10},

}

P. Rauschnabel, R. Felix, M. Meissner, F. Alt, and C. Hinsch. Spatial computing: key concepts, implications, and research agenda. In Proceedings of the international XR-Metaverse conference 2025 (XRM ’25), Springer, 2025.

[BibTeX] [Abstract]

Spatial computing has recently emerged as a promising concept in business, driven by technological advancements and key industry players. In 2023, Apple introduced the term spatial computing alongside the launch of the Apple Vision Pro headset, marking a significant shift in how this technology is perceived and applied across industries. However, while concepts such as Extended Reality or xReality (XR), Augmented Reality (AR), Virtual Reality (VR), and the Metaverse are well-established in both management practice and academic literature, research on spatial computing remains sparse, particularly in a business context. The current research (work-in-progress) addresses this gap by (1) delineating spatial computing from related concepts, and (2) developing a conceptual framework that identifies spatial computing core elements, outcomes, and strategies.

@InProceedings{rauschnabel2025xrm,

author = {Philipp Rauschnabel AND Reto Felix AND Martin Meissner AND Florian Alt AND Chris Hinsch},

booktitle = {Proceedings of the International XR-Metaverse Conference 2025},

title = {Spatial Computing: Key Concepts, Implications, and Research Agenda},

year = {2025},

note = {rauschnabel2025xrm},

publisher = {Springer},

series = {XRM '25},

abstract = {Spatial computing has recently emerged as a promising concept in business, driven by technological advancements and key industry players. In 2023, Apple introduced the term spatial computing alongside the launch of the Apple Vision Pro headset, marking a significant shift in how this technology is perceived and applied across industries. However, while concepts such as Extended Reality or xReality (XR), Augmented Reality (AR), Virtual Reality (VR), and the Metaverse are well-established in both management practice and academic literature, research on spatial computing remains sparse, particularly in a business context. The current research (work-in-progress) addresses this gap by (1) delineating spatial computing from related concepts, and (2) developing a conceptual framework that identifies spatial computing core elements, outcomes, and strategies.},

timestamp = {2025.04.10},

}

O. Hein, A. Saad, Y. Abdelrahman, and F. Alt. Secured augmentation: on-body security and safety interfaces. In Proceedings of the augmented humans international conference 2025 (AHs ’25), Association for Computing Machinery, New York, NY, USA, 2025, p. 497–499. doi:10.1145/3745900.3746128

[BibTeX] [Abstract] [PDF]

With the rapid integration of wearable sensors and head-mounted displays (HMDs), ensuring security and safety in human-computer interaction has never been more critical. The On-Body Security and Safety Interfaces workshop explores the intersection of biometric authentication, safety- and privacy-preserving wearables, physiological sensing, and safety- and security-driven augmented interfaces. Key topics include novel authentication methods for wearable devices, privacy-preserving techniques for continuous physiological monitoring, secure interaction paradigms for AR and VR environments, and adaptive safety mechanisms that enhance user trust and system reliability. Through discussions and collaborative sessions, this workshop aims to foster new ideas and interdisciplinary approaches to ensuring secure, safe, and user-friendly on-body computing. By addressing both emerging challenges and future opportunities, this workshop seeks to pave the way for more resilient, privacy-conscious, and intelligent wearable and augmented systems that prioritize user well-being while maintaining seamless interaction experiences.

@InProceedings{hein2025ahsws,

author = {Hein, Oliver and Saad, Alia and Abdelrahman, Yomna and Alt, Florian},

booktitle = {Proceedings of the Augmented Humans International Conference 2025},

title = {Secured Augmentation: On-Body Security and Safety Interfaces},

year = {2025},

address = {New York, NY, USA},

note = {hein2025ahsws},

pages = {497–499},

publisher = {Association for Computing Machinery},

series = {AHs '25},

abstract = {With the rapid integration of wearable sensors and head-mounted displays (HMDs), ensuring security and safety in human-computer interaction has never been more critical. The On-Body Security and Safety Interfaces workshop explores the intersection of biometric authentication, safety- and privacy-preserving wearables, physiological sensing, and safety- and security-driven augmented interfaces. Key topics include novel authentication methods for wearable devices, privacy-preserving techniques for continuous physiological monitoring, secure interaction paradigms for AR and VR environments, and adaptive safety mechanisms that enhance user trust and system reliability. Through discussions and collaborative sessions, this workshop aims to foster new ideas and interdisciplinary approaches to ensuring secure, safe, and user-friendly on-body computing. By addressing both emerging challenges and future opportunities, this workshop seeks to pave the way for more resilient, privacy-conscious, and intelligent wearable and augmented systems that prioritize user well-being while maintaining seamless interaction experiences.},

doi = {10.1145/3745900.3746128},

isbn = {9798400715662},

keywords = {Security, Privacy, Safety, Mixed Reality, Physiological Sensing, Interface Design},

numpages = {3},

timestamp = {2025.03.16},

url = {http://www.florian-alt.org/unibw/wp-content/publications/hein2025ahsws.pdf},

}

O. Hein, A. Al Qalaq, and F. Alt. Beyond boundaries: exploring augmented reality barrier-bypassing display modalities. In Proceedings of the augmented humans international conference 2025 (AHs ’25), Association for Computing Machinery, New York, NY, USA, 2025, p. 1–6. doi:10.1145/3745900.3746102

[BibTeX] [Abstract] [PDF]

This paper investigates different visualization methods in augmented reality (AR) to display spaces or objects obscured by physical barriers. Using a prototype system that combines 3D scans and AR visualization through a Meta Quest Pro, we explore the usability and effectiveness of two display modalities: point cloud and mesh visualizations. A user study with ten participants evaluates these methods in terms of task completion time and user experience. Results indicate a significant preference for mesh visualizations, which outperform point cloud representations in attractiveness, efficiency, and usability. These findings have relevant implications for AR applications in emergency response, construction, and other domains requiring enhanced visualization of hidden objects.

@InProceedings{hein2025ahs,

author = {Hein, Oliver and Al Qalaq, Adnan and Alt, Florian},

booktitle = {Proceedings of the Augmented Humans International Conference 2025},

title = {Beyond Boundaries: Exploring Augmented Reality Barrier-Bypassing Display Modalities},

year = {2025},

address = {New York, NY, USA},

note = {hein2025ahs},

pages = {1–6},

publisher = {Association for Computing Machinery},

series = {AHs '25},

abstract = {This paper investigates different visualization methods in augmented reality (AR) to display spaces or objects obscured by physical barriers. Using a prototype system that combines 3D scans and AR visualization through a Meta Quest Pro, we explore the usability and effectiveness of two display modalities: point cloud and mesh visualizations. A user study with ten participants evaluates these methods in terms of task completion time and user experience. Results indicate a significant preference for mesh visualizations, which outperform point cloud representations in attractiveness, efficiency, and usability. These findings have relevant implications for AR applications in emergency response, construction, and other domains requiring enhanced visualization of hidden objects.},

doi = {10.1145/3745900.3746102},

isbn = {9798400715662},

keywords = {Augmented Reality, 3D Visualization, User Experience},

location = {Abu Dhabi, UAE},

numpages = {6},

timestamp = {2025.03.14},

url = {http://www.florian-alt.org/unibw/wp-content/publications/hein2025ahs.pdf},

}

J. Schwab, A. Nussbaum, A. Sergeeva, F. Alt, and V. Distler. What Makes Phishing Simulation Campaigns (Un)Acceptable? A Vignette Experiment. In Proceedings of the Usable Security Mini Conference 2025 (USEC’25), Internet Society, San Diego, CA, USA, 2025. doi:10.14722/usec.2025.23010

[BibTeX] [Abstract] [PDF]

[BibTeX] [Abstract] [PDF]

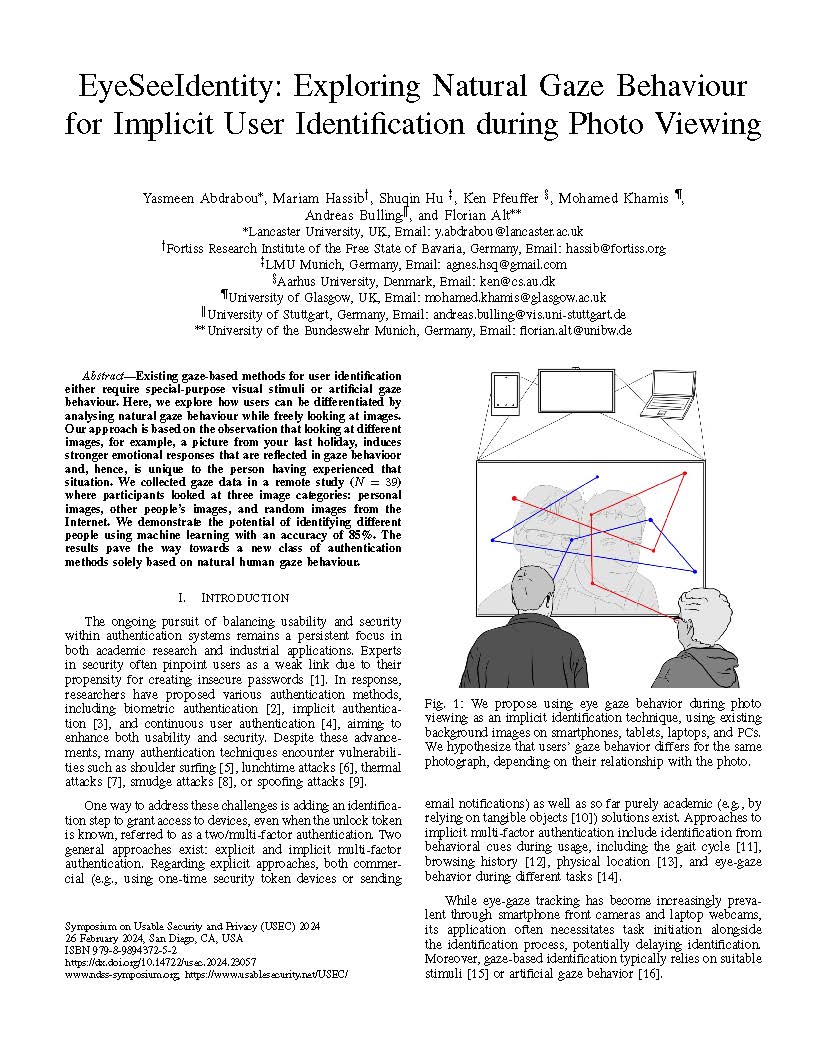

Existing gaze-based methods for user identification either require special-purpose visual stimuli or artificial gaze behavior. Here, we explore how users can be differentiated by analyzing natural gaze behavior while freely looking at images. Our approach is based on the observation that looking at different images, for example, a picture from your last holiday, induces stronger emotional responses that are reflected in gaze behavior and, hence, are unique to the person having experienced that situation. We collected gaze data in a remote study (N=39) where participants looked at three image categories: personal images, other people’s images, and random images from the Internet. We demonstrate the potential of identifying different people using machine learning with an accuracy of 85%. The results pave the way for a new class of authentication methods solely based on natural human gaze behavior.

@InProceedings{schwab2025usec,

author = {Jasmin Schwab AND Alexander Nussbaum AND Anastasia Sergeeva AND Florian Alt AND Verena Distler},

booktitle = {{Proceedings of the Usable Security Mini Conference 2025}},

title = {{What Makes Phishing Simulation Campaigns (Un)Acceptable? A Vignette Experiment}},

year = {2025},

address = {San Diego, CA, USA},

note = {schwab2025usec},

publisher = {Internet Society},

series = {USEC'25},

abstract = {Existing gaze-based methods for user identification either require special-purpose visual stimuli or artificial gaze behavior. Here, we explore how users can be differentiated by analyzing natural gaze behavior while freely looking at images. Our approach is based on the observation that looking at different images, for example, a picture from your last holiday, induces stronger emotional responses that are reflected in gaze behavior and, hence, are unique to the person having experienced that situation. We collected gaze data in a remote study ($N=39$) where participants looked at three image categories: personal images, other people's images, and random images from the Internet. We demonstrate the potential of identifying different people using machine learning with an accuracy of 85\%. The results pave the way for a new class of authentication methods solely based on natural human gaze behavior.},

doi = {10.14722/usec.2025.23010},

isbn = {979-8-9894372-5-2},

owner = {florian},

timestamp = {2025.02.26},

url = {http://www.florian-alt.org/unibw/wp-content/publications/schwab2025usec.pdf},

}

2024

D. Murtezaj, V. Paneva, V. Distler, and F. Alt. A platform for physiological and behavioral security. In Proceedings of the new security paradigms workshop (NSPW ’24), Association for Computing Machinery, New York, NY, USA, 2024, p. 56–70. doi:10.1145/3703465.3703470

[BibTeX] [Abstract] [PDF]

We introduce the concept of Public Security User Interfaces as an innovative approach to enhancing cybersecurity awareness and promoting security behavior change among users in public spaces. We envision these interfaces as dynamic platforms that leverage interactive elements and contextual cues to deliver timely security information and guidance to users. We identify four key objectives: raising awareness, triggering actions, providing control, and sparking conversation. Drawing upon Sasse et al.’s Security Learning Curve, we outline the stages for supporting users in adopting new security-related routines into habits, encompassing knowledge, concordance, self-efficacy, implementation, embedding, and secure behavior. Insights from research on public displays and spontaneous interactions inform the design of public security user interfaces tailored to different environments and user groups. Furthermore, we propose research questions pertaining to stakeholders, content, user interface design, and effects on users and discuss challenges as well as limitations. Introducing public security user interfaces to bridge the gap between cybersecurity experts and lay users sets the stage for future research and development in this emerging field.

@InProceedings{murtezaj2024nspw,

author = {Murtezaj, Doruntina and Paneva, Viktorija and Distler, Verena and Alt, Florian},

booktitle = {Proceedings of the New Security Paradigms Workshop},

title = {A Platform for Physiological and Behavioral Security},

year = {2024},

address = {New York, NY, USA},

note = {murtezaj2024nspw},

pages = {56–70},

publisher = {Association for Computing Machinery},

series = {NSPW '24},

abstract = {We introduce the concept of Public Security User Interfaces as an innovative approach to enhancing cybersecurity awareness and promoting security behavior change among users in public spaces. We envision these interfaces as dynamic platforms that leverage interactive elements and contextual cues to deliver timely security information and guidance to users. We identify four key objectives: raising awareness, triggering actions, providing control, and sparking conversation. Drawing upon Sasse et al.’s Security Learning Curve, we outline the stages for supporting users in adopting new security-related routines into habits, encompassing knowledge, concordance, self-efficacy, implementation, embedding, and secure behavior. Insights from research on public displays and spontaneous interactions inform the design of public security user interfaces tailored to different environments and user groups. Furthermore, we propose research questions pertaining to stakeholders, content, user interface design, and effects on users and discuss challenges as well as limitations. Introducing public security user interfaces to bridge the gap between cybersecurity experts and lay users sets the stage for future research and development in this emerging field.},

doi = {10.1145/3703465.3703470},

isbn = {9798400711282},

keywords = {Security User Interfaces, Privacy Awareness, Public Displays, Behaviour Change},

numpages = {15},

timestamp = {2025.01.01},

url = {http://www.florian-alt.org/unibw/wp-content/publications/murtezaj2024nspw.pdf},

}

J. Janeiro, S. Alves, T. Guerreiro, V. Distler, and F. Alt. Understanding phishing experiences of screen reader users. IEEE Security & Privacy, 2024.

[BibTeX] [PDF]

@Article{janeiro2024ieeesp,

author = {Joao Janeiro AND Sergio Alves AND Tiago Guerreiro AND Verena Distler AND Florian Alt},

journal = {IEEE Security \& Privacy},

title = {Understanding Phishing Experiences of Screen Reader Users},

year = {2024},

month = dec,

note = {janeiro2024ieeesp},

timestamp = {2024.12.09},

url = {http://www.florian-alt.org/unibw/wp-content/publications/janeiro2024ieeesp.pdf},

}

V. Paneva, M. Strauss, V. Winterhalter, S. Schneegass, and F. Alt. Privacy in the metaverse. IEEE Pervasive Computing, vol. 20, iss. 4, p. 5, 2024. doi:10.1109/MPRV.2024.3432953

[BibTeX] [PDF]

@Article{paneva2024ieeepvc,

author = {Viktorija Paneva AND Marvin Strauss AND Verena Winterhalter AND Stefan Schneegass AND Florian Alt},

journal = {IEEE Pervasive Computing},

title = {Privacy in the Metaverse},

year = {2024},

month = dec,

note = {paneva2024ieeepvc},

number = {4},

pages = {5},

volume = {20},

doi = {10.1109/MPRV.2024.3432953},

timestamp = {2024.12.09},

url = {http://www.florian-alt.org/unibw/wp-content/publications/paneva2024ieeepvc.pdf},

}

Proceedings of the ACM on human-computer interaction: interactive surfaces and spaces track. New York, NY, USA: Association for Computing Machinery, 2024.

[BibTeX] [PDF]

@Proceedings{houben2024iss,

title = {Proceedings of the ACM on Human-Computer Interaction: Interactive Surfaces and Spaces Track},

year = {2024},

address = {New York, NY, USA},

note = {houben2024iss},

publisher = {Association for Computing Machinery},

editors = {Steven Houben and Gun Lee and Florian Alt AND Mark Hancock},

location = {Vancouver, BC, Canada},

timestamp = {2024.11.05},

url = {http://www.florian-alt.org/unibw/wp-content/publications/houoben2024iss.pdf},

}

F. Dietz, L. Mecke, D. Riesner, and F. Alt. Delusio – Plausible Deniability For Face Recognition. Proceedings of the ACM on human-computer interaction, vol. 6, iss. MHCI, 2024. doi:10.1145/3676494

[BibTeX] [Abstract] [PDF]

We developed an Android phone unlock mechanism that uses facial recognition combined with specific facial expressions to access a specially secured portion of the device, designed for plausible deniability. The widespread adoption of biometric authentication methods, such as fingerprint and facial recognition, has revolutionized mobile device security, offering enhanced protection against shoulder-surfing attacks and improving user convenience compared to traditional passwords. However, a downside is the potential for third parties to coerce users into unlocking the device. While text-based authentication allows users to reveal a hidden system by entering a special password, this is challenging with face authentication. We evaluated our approach in a role-playing user study involving 50 participants in pairs, with one participant acting as the attacker and the other as the suspect. Suspects successfully accessed the secured area, mostly without being detected. They further expressed interest in this feature on their personal phones. We also discuss open challenges and opportunities in implementing such authentication mechanisms.

@Article{dietz2024mobilehci,

abstract = {We developed an Android phone unlock mechanism that uses facial recognition combined with specific facial expressions to access a specially secured portion of the device, designed for plausible deniability. The widespread adoption of biometric authentication methods, such as fingerprint and facial recognition, has revolutionized mobile device security, offering enhanced protection against shoulder-surfing attacks and improving user convenience compared to traditional passwords. However, a downside is the potential for third parties to coerce users into unlocking the device. While text-based authentication allows users to reveal a hidden system by entering a special password, this is challenging with face authentication. We evaluated our approach in a role-playing user study involving 50 participants in pairs, with one participant acting as the attacker and the other as the suspect. Suspects successfully accessed the secured area, mostly without being detected. They further expressed interest in this feature on their personal phones. We also discuss open challenges and opportunities in implementing such authentication mechanisms.},

address = {New York, NY, USA},

articleno = {249},

author = {Felix Dietz AND Lukas Mecke AND Daniel Riesner AND Florian Alt},

doi = {10.1145/3676494},

issue_date = {September 2024},

journal = {Proceedings of the ACM on Human-Computer Interaction},

keywords = {biometrics, facial authentication, plausible deniability},

month = sep,

note = {dietz2024mobilehci},

number = {MHCI},

numpages = {13},

publisher = {Association for Computing Machinery},

timestamp = {2024.10.27},

title = {{Delusio - Plausible Deniability For Face Recognition}},

url = {http://www.florian-alt.org/unibw/wp-content/publications/dietz2024mobilehci.pdf},

volume = {8},

year = {2024},

}

S. Prange, P. Knierim, G. Knoll, F. Dietz, A. De Luca, and F. Alt. “I do (not) need that Feature!” – Understanding Users’ Awareness and Control of Privacy Permissions on Android Smartphones. In Twentieth Symposium on Usable Privacy and Security (SOUPS’24), USENIX, Philadelphia, PA, USA, 2024.

[BibTeX] [Abstract] [PDF]

We present the results of the first field study (N = 132) investigating users’ (1) awareness of Android privacy permissions granted to installed apps and (2) control behavior over these permissions. Our research is motivated by many smartphone features and apps requiring access to personal data. While Android provides privacy permission management mechanisms to control access to this data, its usage is not yet well understood. To this end, we built and deployed an Android application on participants’ smartphones, acquiring data on actual privacy permission states of installed apps, monitoring permission changes, and assessing reasons for changes using experience sampling. The results of our study show that users often conduct multiple revocations in short time frames, and revocations primarily affect rarely used apps or permissions non-essential for apps’ core functionality. Our findings can inform future (proactive) privacy control mechanisms and help target opportune moments for supporting privacy control.

@InProceedings{prange2024soups,

author = {Sarah Prange AND Pascal Knierim AND Gabriel Knoll AND Felix Dietz AND Alexander {De Luca} AND Florian Alt},

booktitle = {{Twentieth Symposium on Usable Privacy and Security}},

title = {{``I do (not) need that Feature!'' – Understanding Users’ Awareness and Control of Privacy Permissions on Android Smartphones}},

year = {2024},

address = {Philadelphia, PA, USA},

month = aug,

note = {prange2024soups},

publisher = {USENIX},

series = {SOUPS'24},

abstract = {We present the results of the first field study (N = 132) investigating users’ (1) awareness of Android privacy permissions granted to installed apps and (2) control behavior over these permissions. Our research is motivated by many smartphone features and apps requiring access to personal data. While Android provides privacy permission management mechanisms to control access to this data, its usage is not yet well understood. To this end, we built and deployed an Android application on participants’ smartphones, acquiring data on actual privacy permission states of installed apps, monitoring permission changes, and assessing reasons for changes using experience sampling. The results of our study show that users often conduct multiple revocations in short time frames, and revocations primarily affect rarely used apps or permissions non-essential for apps’ core functionality. Our findings can inform future (proactive) privacy control mechanisms and help target opportune moments for supporting privacy control.},

timestamp = {2024.08.11},

url = {http://www.florian-alt.org/unibw/wp-content/publications/prange2024soups.pdf},

}

E. Bouquet, S. Von Der Au, C. Schneegass, and F. Alt. CoAR-TV: Design and Evaluation of Asynchronous Collaboration in AR-Supported TV Experiences. In Proceedings of the 2024 ACM International Conference on Interactive Media Experiences (IMX ’24), Association for Computing Machinery, New York, NY, USA, 2024, pp. 231–245. doi:10.1145/3639701.3656320

[BibTeX] [Abstract] [PDF]

Television has long been a unidirectional medium. However, when TV is used for educational purposes, like in edutainment shows, interactivity could enhance the learning benefit for the viewer. In recent years, AR has been increasingly explored in HCI research to enable interaction among viewers as well as between viewers and hosts. Yet, how to implement this collaborative AR (CoAR) experience remains an open research question. This paper explores four approaches to asynchronous collaboration based on the Cognitive Apprenticeship Model: scaffolding, coaching, modeling, and collaborating. We developed a pilot show for a fictional edutainment series and evaluated the concept with two TV experts. In a Wizard-of-Oz study, we tested our AR prototype with eight users and evaluated the perception of the four collaboration styles. The AR-enhanced edutainment concept was well-received by the participants, and the coaching collaboration style was perceived as favorable and could possibly be combined with the modeling style.

@InProceedings{bouquet2024imx,

author = {Bouquet, Elizabeth and Von Der Au, Simon and Schneegass, Christina and Alt, Florian},

booktitle = {{Proceedings of the 2024 ACM International Conference on Interactive Media Experiences}},

title = {{CoAR-TV: Design and Evaluation of Asynchronous Collaboration in AR-Supported TV Experiences}},

year = {2024},

address = {New York, NY, USA},

note = {bouquet2024imx},

pages = {231–245},

publisher = {Association for Computing Machinery},

series = {IMX '24},

abstract = {Television has long been a unidirectional medium. However, when TV is used for educational purposes, like in edutainment shows, interactivity could enhance the learning benefit for the viewer. In recent years, AR has been increasingly explored in HCI research to enable interaction among viewers as well as between viewers and hosts. Yet, how to implement this collaborative AR (CoAR) experience remains an open research question. This paper explores four approaches to asynchronous collaboration based on the Cognitive Apprenticeship Model: scaffolding, coaching, modeling, and collaborating. We developed a pilot show for a fictional edutainment series and evaluated the concept with two TV experts. In a Wizard-of-Oz study, we tested our AR prototype with eight users and evaluated the perception of the four collaboration styles. The AR-enhanced edutainment concept was well-received by the participants, and the coaching collaboration style was perceived as favorable and could possibly be combined with the modeling style.},

doi = {10.1145/3639701.3656320},

isbn = {9798400705038},

keywords = {AR, collaboration, edutainment, interactive television, mobile},

location = {Stockholm, Sweden},

numpages = {15},

timestamp = {2024.06.10},

url = {http://florian-alt.org/unibw/wp-content/publications/bouquet2024imx.pdf},

}

S. Delgado Rodriguez, P. Chatterjee, A. D. Phuong, F. Alt, and K. Marky. Do You Need to Touch? Exploring Correlations between Personal Attributes and Preferences for Tangible Privacy Mechanisms. In Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems (CHI ’24), Association for Computing Machinery, New York, NY, USA, 2024. doi:10.1145/3613904.3642863

[BibTeX] [Abstract] [PDF] [Video]

This paper explores how personal attributes, such as age, gender, technological expertise, or “need for touch”, correlate with people’s preferences for properties of tangible privacy protection mechanisms, for example, physically covering a camera. For this, we conducted an online survey (N = 444) where we captured participants’ preferences for eight established tangible privacy mechanisms well-known in daily life, their perceptions of effective privacy protection, and personal attributes. We found that the attributes that correlated most strongly with participants’ perceptions of the established tangible privacy mechanisms were their “need for touch” and previous experiences with the mechanisms. We use our findings to identify desirable characteristics of tangible mechanisms to better inform future tangible, digital, and mixed privacy protections. We also show which individuals benefit most from tangibles, ultimately motivating a more individual and effective approach to privacy protection in the future.

@InProceedings{delgado2024chi,

author = {Sarah {Delgado Rodriguez} AND Priyasha Chatterjee AND Anh Dao Phuong AND Florian Alt AND Karola Marky},

booktitle = {{Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems}},

title = {{Do You Need to Touch? Exploring Correlations between Personal Attributes and Preferences for Tangible Privacy Mechanisms}},

year = {2024},

address = {New York, NY, USA},

note = {delgado2024chi},

publisher = {Association for Computing Machinery},

series = {CHI '24},

abstract = {This paper explores how personal attributes, such as age, gender, technological expertise, or “need for touch”, correlate with people’s preferences for properties of tangible privacy protection mechanisms, for example, physically covering a camera. For this, we conducted an online survey (N = 444) where we captured participants’ preferences for eight established tangible privacy mechanisms well-known in daily life, their perceptions of effective privacy protection, and personal attributes. We found that the attributes that correlated most strongly with participants’ perceptions of the established tangible privacy mechanisms were their “need for touch” and previous experiences with the mechanisms. We use our findings to identify desirable characteristics of tangible mechanisms to better inform future tangible, digital, and mixed privacy protections. We also show which individuals benefit most from tangibles, ultimately motivating a more individual and effective approach to privacy protection in the future.},

doi = {10.1145/3613904.3642863},

isbn = {979-8-4007-0330-0},

location = {Honolulu, HI, USA},

timestamp = {2024.05.16},

url = {http://florian-alt.org/unibw/wp-content/publications/delgado2024chi.pdf},

video = {delgado2024chi},

}

F. Alt, P. Knierim, J. Williamson, and J. Paradiso. The Pervasive Multiverse. IEEE Pervasive Computing, vol. 23, iss. 1, pp. 7–9, 2024. doi:10.1109/MPRV.2024.3385528

[BibTeX] [Abstract] [PDF]

The pervasive multiverse, an interconnected web of diverse and dynamic digital landscapes, promises to redefine our understanding of computing’s reach and impact. In doing so, it extends beyond the traditional boundaries of pervasive computing: digital ecosystems seamlessly intertwine with the physical, creating an immersive and interconnected experience across devices, contexts, and users.

@Article{alt2024ieeepvcsi,

author = {Alt, Florian and Knierim, Pascal and Williamson, Julie and Paradiso, Joe},

journal = {{IEEE Pervasive Computing}},

title = {{The Pervasive Multiverse}},

year = {2024},

issn = {1536-1268},

month = jun,

note = {alt2024ieeepvcsi},

number = {1},

pages = {7–9},

volume = {23},

abstract = {The pervasive multiverse, an interconnected web of diverse and dynamic digital landscapes, promises to redefine our understanding of computing's reach and impact. In doing so, it extends beyond the traditional boundaries of pervasive computing: digital ecosystems seamlessly intertwine with the physical, creating an immersive and interconnected experience across devices, contexts, and users.},

address = {USA},

doi = {10.1109/MPRV.2024.3385528},

issue_date = {Jan.-March 2024},

numpages = {3},

publisher = {IEEE Educational Activities Department},

timestamp = {2024.05.16},

url = {https://doi.org/10.1109/MPRV.2024.3385528},

}

K. Marky, A. Stöver, S. Prange, K. Bleck, P. G. V. Zimmermann, F. Müller, F. Alt, and M. Mühlhäuser. Decide Yourself or Delegate – User Preferences Regarding the Autonomy of Personal Privacy Assistants in Private IoT-Equipped Environments. In Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems (CHI ’24), Association for Computing Machinery, New York, NY, USA, 2024. doi:10.1145/3613904.3642591

[BibTeX] [Abstract] [PDF] [Video]